Machine Learning (ML) has transformed the way we interact with technology, enabling innovations across various sectors. From predicting patient outcomes in healthcare to optimizing financial operations, ML has become an essential tool for data-driven decision-making. The field focuses on developing algorithms that allow computers to learn from data and make predictions based on it, fundamentally changing how businesses operate and engage with their customers.

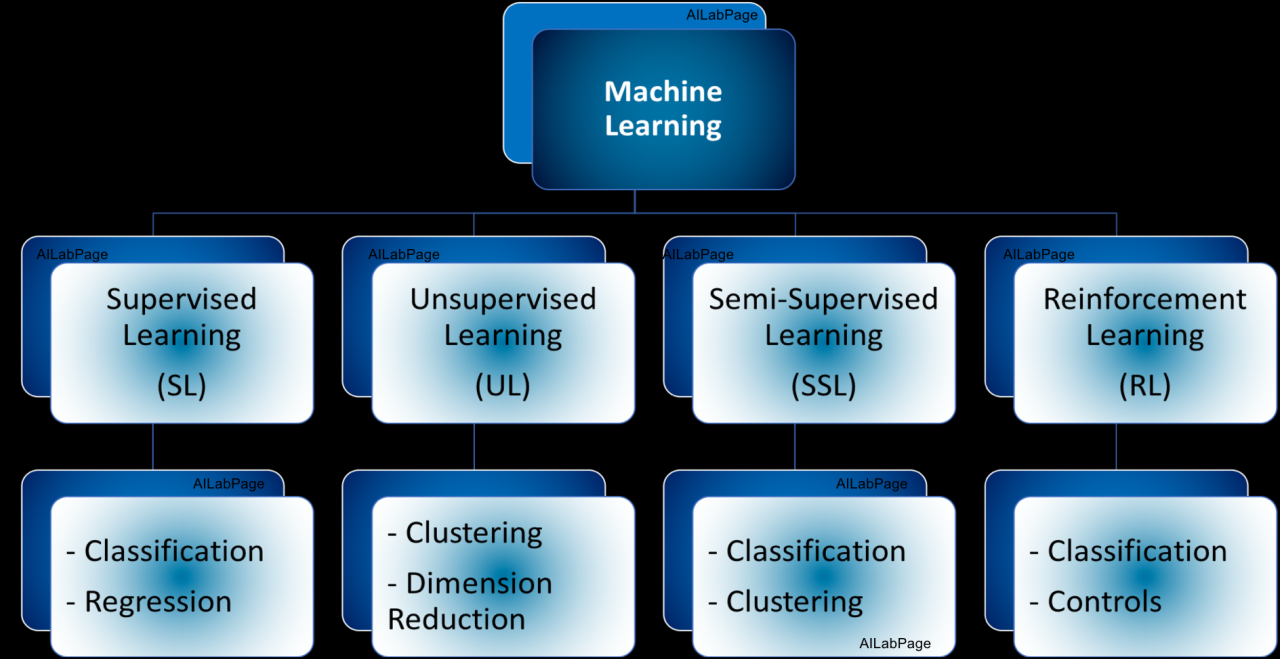

Understanding the core fundamentals and terminology of ML is crucial for anyone looking to delve into this dynamic field. Concepts like supervised and unsupervised learning, along with reinforcement learning, form the bedrock of machine learning applications. By exploring various algorithms and their practical applications, we can appreciate the immense potential of ML to enhance efficiency and drive innovation.

Machine Learning Fundamentals and Terminology are crucial for understanding this field.

Machine Learning (ML) is a subset of artificial intelligence that focuses on the development of algorithms and statistical models that allow computer systems to perform specific tasks without explicit instructions. Understanding the fundamental concepts and terminology is essential for anyone diving into this rapidly evolving field. This knowledge serves as the foundation for more complex topics and applications within machine learning.

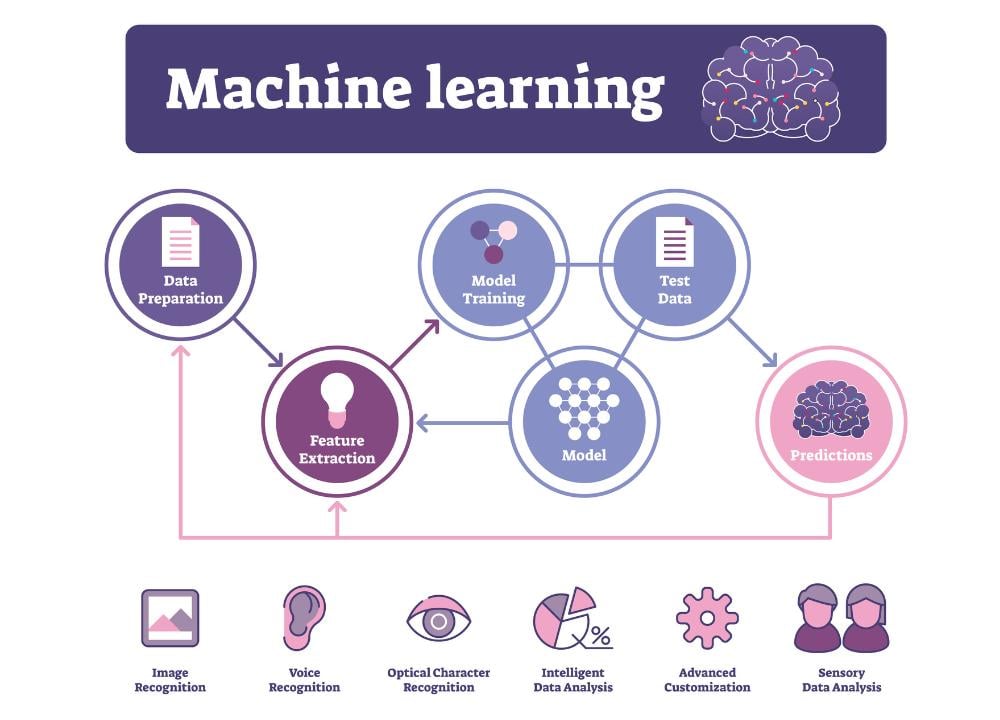

Key concepts in machine learning include data, features, models, training, and evaluation. Data refers to the collection of information that algorithms will learn from. Features are the individual measurable properties or characteristics of the data being analyzed. Models are mathematical representations that learn patterns in the data. Training involves using a dataset to create a model, while evaluation measures the model’s accuracy and performance on unseen data.

Differences Between Supervised, Unsupervised, and Reinforcement Learning Methods

Machine learning methods can be broadly categorized into three types: supervised learning, unsupervised learning, and reinforcement learning. Each method has distinct characteristics and applications.

Supervised learning involves training a model on a labeled dataset, meaning the input data is paired with the correct output. The goal is to learn a mapping from inputs to outputs. Common examples include:

- Linear Regression: Used for predicting continuous values, such as housing prices based on features like location, size, and amenities.

- Classification Algorithms: Such as Support Vector Machines or Decision Trees, used for tasks like spam detection in emails.
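As a minimal sketch of supervised learning, the snippet below fits a one-feature linear regression by ordinary least squares using only the Python standard library. The house sizes and prices are made-up illustrative numbers, and `fit_line` is a hypothetical helper name rather than a function from any library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for the model y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled training data (illustrative only):
# input = size in square meters, correct output = price in thousands
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(sizes, prices)

# Use the learned mapping to predict the price of an unseen 100 m^2 house
predicted_price = slope * 100 + intercept
print(slope, intercept, predicted_price)
```

The labels (`prices`) are what make this supervised: the fit is driven entirely by the gap between predicted and known outputs.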

Unsupervised learning, on the other hand, works with unlabeled data. The algorithms attempt to identify patterns or groupings within the dataset without predefined outputs. Popular techniques include:

- Clustering Algorithms: Such as K-means, which can segment customers based on purchasing behavior.

- Dimensionality Reduction Techniques: Like Principal Component Analysis (PCA), used for image compression or noise reduction.

Reinforcement learning is a different approach where an agent learns to make decisions by taking actions in an environment to maximize cumulative reward. This method is prevalent in scenarios such as:

- Game Playing: Systems like AlphaGo have defeated top professional players at Go.

- Robotics: Training robots to navigate and perform tasks autonomously through trial and error.
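The trial-and-error loop behind reinforcement learning can be sketched with tabular Q-learning, one of several possible algorithms, on a toy environment. Everything here is an assumption made for illustration: the five-state corridor, the reward of 1 at the goal, and the learning rate and discount values.

```python
import random

N_STATES = 5           # states 0..4 on a line; state 4 is the goal
ACTIONS = (-1, +1)     # move left or move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (illustrative values)

def step(state, action):
    """Deterministic toy environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):                # cap the episode length
            action = rng.choice(ACTIONS)    # explore with purely random actions
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward
            # immediate reward + discounted value of the best next action
            target = reward + GAMMA * best_next
            q[(state, action)] += ALPHA * (target - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q_table = train()

# Greedy policy for each non-goal state: the action with the highest learned value
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told that moving right is correct. It infers this from the rewards it collects, which is the defining difference from supervised learning.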

Understanding these methodologies is crucial for effectively applying machine learning techniques to real-world problems.

Common Machine Learning Algorithms and Their Applications

Numerous algorithms exist within the machine learning framework, each suited for specific types of problems. Familiarity with these algorithms can enhance the ability to select the appropriate one for a given task.

Some common algorithms include:

- Linear Regression: Applied in predicting numerical outcomes based on linear relationships.

- Logistic Regression: Used for binary classification tasks, such as predicting whether an email is spam or not.

- Decision Trees: A versatile algorithm for both classification and regression tasks, easy to interpret.

- Random Forest: An ensemble technique that improves accuracy by combining multiple decision trees.

- Neural Networks: Particularly useful in complex problems like image and speech recognition.

These algorithms have extensive applications across industries, from finance for credit scoring to healthcare for disease prediction and treatment recommendation systems. With the right understanding of machine learning fundamentals and terminology, individuals can harness the power of these algorithms to drive innovation and solve complex problems.

The role of data in machine learning models holds significant importance.

Data is the backbone of machine learning, dictating the success or failure of models. The quality and quantity of data play crucial roles in how well these models perform. High-quality data ensures that the model learns from relevant, accurate examples, while sufficient quantity supports the model’s ability to generalize from those examples. Without a solid foundation of data, even the most sophisticated algorithms can falter, resulting in poor predictions and insights.

The performance of machine learning models is intricately tied to the data they are trained on. Quality data, characterized by its accuracy, completeness, and relevance, allows models to learn the underlying patterns effectively. Conversely, noisy, missing, or biased data can lead the model to learn incorrect patterns, ultimately degrading its performance. Additionally, the volume of data influences model robustness; a larger dataset typically enables the model to recognize diverse scenarios, reducing overfitting and enhancing generalization capabilities. For instance, in image classification tasks, models trained on extensive datasets like ImageNet significantly outperform those trained on smaller datasets due to their exposure to a wider variety of images and scenarios.

Data Preprocessing Techniques

Data preprocessing is vital for transforming raw data into a format suitable for modeling. It addresses issues like noise, missing values, and irrelevant features, ensuring that the model can learn effectively. Key techniques include:

- Data Cleaning: This involves removing inaccuracies and inconsistencies from the dataset, correcting errors, and filling in missing values. Techniques such as mean imputation or using algorithms like k-nearest neighbors (KNN) can be employed to address gaps.

- Normalization and Standardization: Normalizing data scales it to fit within a specific range, while standardization transforms it to have a mean of zero and a standard deviation of one. Both techniques help in improving model convergence during training.

- Encoding Categorical Variables: Most machine learning algorithms require numerical input, so categorical variables usually must be converted into a numerical format. Techniques such as one-hot encoding and label encoding are commonly used.

- Data Augmentation: Particularly in image processing, data augmentation techniques generate synthetic variations of existing data by applying transformations like rotation, flipping, or scaling to enhance the dataset’s diversity.
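Several of the techniques above can be sketched in a few lines of plain Python. The function names and sample values are hypothetical, chosen only for illustration. Real projects typically rely on a library for these steps, and the sketch assumes each column has at least two distinct values.

```python
from statistics import mean, pstdev

def mean_impute(values):
    """Replace missing entries (None) with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift and scale values to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def one_hot_encode(labels):
    """Map each label to a 0/1 vector with one position per distinct category."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

ages = mean_impute([20, None, 40, 60])   # the gap is filled with the mean, 40
normalized = min_max_normalize(ages)     # smallest value maps to 0, largest to 1
standardized = standardize(ages)         # centered on 0 with unit spread
colors = one_hot_encode(["red", "green", "red"])  # columns ordered: green, red

print(ages, normalized, standardized, colors)
```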

Feature Selection and Engineering

Feature selection and engineering are critical steps in the model-building process, directly influencing the model’s capability to learn effectively. Selected features should represent the underlying problem accurately while minimizing noise and redundancy. Effective feature selection can reduce overfitting, enhance model interpretability, and ultimately improve performance. Common methods for feature selection include:

- Filter Methods: These evaluate the importance of features based on statistical tests, allowing for the removal of irrelevant features before model training.

- Wrapper Methods: These involve evaluating feature subsets using predictive models, iteratively adding or removing features based on performance metrics.

- Embedded Methods: These rely on algorithms that perform feature selection as part of training, such as Lasso (L1) regularization, which shrinks the coefficients of less important features and can drive them exactly to zero.

Feature engineering involves creating new features from existing data, thereby enhancing the model’s ability to capture complex patterns. Techniques such as polynomial feature generation or interactions between features can provide additional insights that improve model accuracy. By investing time in data preprocessing and feature manipulation, data scientists can significantly enhance the performance and reliability of machine learning models.
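To make these ideas concrete, here is a small sketch of a filter method followed by one engineered feature. It scores each feature by the absolute value of its Pearson correlation with the target and keeps those above a threshold. The dataset, the feature names, and the 0.5 cutoff are all assumptions made for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Illustrative housing data: two plausible predictors and one irrelevant column
features = {
    "size": [50, 70, 90, 110, 130],
    "rooms": [2, 3, 3, 4, 5],
    "door_number": [7, 1, 9, 3, 5],
}
price = [150, 200, 260, 310, 370]

# Filter method: rank features by a statistic computed before any model is trained
scores = {name: abs(pearson(column, price)) for name, column in features.items()}
selected = [name for name, score in scores.items() if score >= 0.5]

# Feature engineering: an interaction term built from two existing features
size_x_rooms = [s * r for s, r in zip(features["size"], features["rooms"])]

print(selected, size_x_rooms)
```

Correlation only captures linear relationships, so a filter like this can discard a feature that matters in a nonlinear way. That is one reason wrapper and embedded methods are often used alongside it.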

Machine Learning Applications across various industries exhibit diverse functionalities.

Machine learning (ML) is increasingly transforming various industries by enabling advanced data analytics, automating processes, and driving innovation. With its capacity to analyze vast amounts of data and uncover patterns that humans may overlook, ML is being integrated into sectors like healthcare, finance, and automotive. Each of these industries presents unique applications and challenges, showcasing the versatility and impact of machine learning technologies.

Machine Learning in Healthcare

In the healthcare sector, machine learning applications have the potential to revolutionize patient care and operational efficiency. One prominent use case is in predictive analytics for patient outcomes. Hospitals are employing ML algorithms to analyze historical patient data, which helps in predicting the likelihood of readmissions and identifying patients at risk for certain diseases. For instance, Mount Sinai Health System utilized ML models to predict heart failure risk, improving preventive care measures.

Challenges in implementing ML in healthcare include data privacy concerns and the need for high-quality, standardized data. The integration of systems across different healthcare providers can be complex, and ensuring compliance with regulations like HIPAA adds another layer of difficulty. Moreover, the interpretability of ML models is crucial since healthcare professionals must understand the reasoning behind predictions to make informed decisions.

Another widely publicized project is IBM’s Watson for Oncology, which was designed to analyze medical literature and patient records to assist oncologists in developing personalized treatment plans. It illustrates how ML can be applied to clinical decision support, although its real-world results have been debated.

Machine Learning in Finance

The finance industry has embraced machine learning for various applications, notably in fraud detection and algorithmic trading. ML algorithms can analyze transaction patterns in real-time, enabling financial institutions to detect anomalies indicative of fraud. For example, PayPal employs machine learning to monitor transactions, significantly reducing fraudulent activity and enhancing customer trust.

Despite its benefits, implementing machine learning in finance comes with hurdles such as regulatory compliance and the challenge of managing unstructured data. Financial organizations must ensure their algorithms are transparent and explainable, particularly when algorithms influence credit scoring and lending decisions. Additionally, the rapidly changing financial landscape necessitates constant model recalibration and updates.

One notable success is JPMorgan Chase’s COiN (Contract Intelligence), which uses machine learning to analyze legal documents and extract relevant data efficiently, reportedly saving the bank around 360,000 hours of manual review per year while improving accuracy in documentation processes.

Machine Learning in the Automotive Sector

In the automotive industry, machine learning plays a crucial role in enhancing vehicle safety and enabling the development of autonomous driving technologies. ML algorithms are used for real-time analysis of sensor data, which allows vehicles to recognize obstacles, make driving decisions, and improve navigation systems. For instance, Tesla’s Autopilot, an advanced driver-assistance system, uses machine learning models that are refined with data collected from its fleet.

Challenges in this sector include the need for extensive training data and the complexities involved in ensuring safety and reliability. The automotive industry faces regulatory scrutiny regarding the safety of autonomous vehicles, and manufacturers must address ethical considerations related to AI decision-making in life-and-death scenarios.

A significant achievement in this area is Waymo, which has successfully deployed a fleet of autonomous vehicles in select locations, showcasing the transformative potential of machine learning in reshaping transportation.

Different Types of Machine Learning Algorithms serve distinct purposes and functions.

Machine learning algorithms come in various forms, each designed to tackle different kinds of problems. Understanding these distinctions is crucial for selecting the right algorithm for a specific task or dataset. This section delves into the primary types of algorithms, emphasizing their unique functions and applications in real-world scenarios.

Classification and Regression Algorithms

Classification and regression algorithms are both types of supervised learning techniques, but they serve distinct purposes. Classification algorithms predict categorical outcomes, while regression algorithms predict continuous values.

Classification examples include:

- Logistic Regression: Used for binary classification tasks, such as predicting whether an email is spam or not based on its content.

- Decision Trees: Applicable in scenarios like diagnosing diseases based on patient data, where each branch represents a decision rule.

- Support Vector Machines (SVM): Effective in image recognition tasks, categorizing images based on labeled training data.

Regression examples include:

- Linear Regression: Commonly used to predict house prices based on features like size and location.

- Polynomial Regression: Useful for modeling relationships that are not linear, such as the trajectory of a rocket.

- Ridge Regression: Employed in situations where multicollinearity is present among predictors, improving predictions in fields like finance.

The choice between classification and regression hinges on the nature of the output variable: whether it’s categorical or continuous.
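The sketch below shows what a categorical output looks like in practice. It trains a one-feature logistic regression with batch gradient descent and then thresholds the predicted probability at 0.5 to produce a class label. The study-hours data, the learning rate, and the epoch count are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example under the current parameters
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Illustrative labeled data: hours studied -> failed (0) or passed (1)
hours = [1, 2, 3, 4, 5, 6]
passed = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(hours, passed)

def predict_probability(x):
    """Continuous score in (0, 1)."""
    return sigmoid(w * x + b)

def predict_class(x):
    """Categorical output obtained by thresholding the probability at 0.5."""
    return 1 if predict_probability(x) >= 0.5 else 0

print(predict_class(1), predict_class(6))
```

Despite its name, logistic regression is a classifier here: the model produces a probability internally, but the final answer is one of two categories.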

Clustering Algorithms

Clustering algorithms group similar data points into clusters without pre-labeled outcomes. They are pivotal in exploratory data analysis, enabling insights into data patterns and structures.

Common clustering techniques include:

- K-Means: Frequently used in customer segmentation to identify distinct customer groups based on purchasing behavior.

- Hierarchical Clustering: Useful in gene expression analysis, providing a tree-like structure that indicates the relationship among different genes.

- DBSCAN: Effective in spatial data analysis, such as identifying clusters of geographical locations with high crime rates.

Clustering algorithms excel in situations where the structure of the data is unknown, allowing for the discovery of inherent patterns.
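A bare-bones version of K-Means shows how such groups emerge without labels. This is a simplified sketch: the customer data is invented, the starting centroids are picked by hand, and the loop runs for a fixed number of iterations rather than checking for convergence.

```python
def kmeans(points, centroids, iterations=10):
    """Simplified K-Means for 2D points with caller-supplied starting centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its previous centroid)
        centroids = [
            (sum(p[0] for p in cl) / len(cl), sum(p[1] for p in cl) / len(cl)) if cl else c
            for cl, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Illustrative customers: (visits per month, average spend)
customers = [(1, 10), (2, 12), (1, 11), (9, 80), (10, 85), (8, 78)]

# Hand-picked starting centroids; practical implementations choose these more carefully
centroids, clusters = kmeans(customers, [customers[0], customers[3]])
print(centroids)
print(clusters)
```

No labels appear anywhere in the input. The two segments come purely from the distances between points.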

Importance of Neural Networks and Deep Learning

Neural networks and deep learning represent advanced techniques that have transformed the machine learning landscape. Loosely inspired by the way neurons in the human brain are connected, they stack layers of simple computational units, which allows them to learn complex patterns in data.

Key aspects of neural networks and deep learning include:

- Multilayer Perceptron (MLP): A basic form of neural network that processes data through multiple fully connected layers, used for tasks such as simple image classification.

- Convolutional Neural Networks (CNN): Specialized for processing grid-like data, particularly effective in image analysis and recognition.

- Recurrent Neural Networks (RNN): Designed for sequential data, such as time series analysis and natural language processing, making them suitable for applications like chatbots.

Deep learning has shown significant efficacy in tasks requiring high levels of accuracy, such as medical diagnosis from imaging data. The ability of neural networks to learn from vast amounts of data is reshaping industries, from finance to healthcare, driving innovation and efficiency.
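To show why layering matters, the sketch below wires up a tiny two-layer network that computes XOR, a function no single linear unit can represent. The weights are set by hand for clarity and are an assumption of this example. A real network would learn them from data through training.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted input is positive."""
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    """One unit: weighted sum of inputs plus bias, passed through the activation."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)

def xor_mlp(x1, x2):
    # Hidden layer: one unit behaves like OR, the other like AND
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Output layer: fires when OR is true but AND is not
    return neuron([h_or, h_and], [1, -1], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_mlp(a, b))
```

The hidden layer re-expresses the inputs in a form that the output unit can separate with a single threshold. Deep networks repeat the same idea across many layers.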

The process of training and evaluating machine learning models is critical for success.

Training and evaluating machine learning models is a meticulous process that significantly influences the effectiveness of the models developed. It involves careful preparation and assessment of both the data and the algorithms to ensure that the model generalizes well to new, unseen data. Understanding the steps involved in this process can lead to better decision-making and improved outcomes in various applications of machine learning.

Training Process and Validation Techniques

The training process of a machine learning model generally follows several key steps, which include data preparation, model selection, training, and validation. Initially, the data must be preprocessed and cleaned to remove noise and inconsistencies. This can involve normalization, handling missing values, and encoding categorical variables. The data is commonly split into training and testing sets, which is crucial for evaluating the model’s performance on data it has not seen during training. Any statistics used in preprocessing, such as the mean and standard deviation for standardization, should be computed on the training set only and then applied to the test set, so that no information leaks from the test data into training.

A typical split ratio might be 80% for training and 20% for testing, although this can vary depending on the dataset size. Additionally, techniques such as cross-validation are employed to ensure a more robust assessment of the model’s performance. Cross-validation involves partitioning the training data into multiple subsets and repeatedly training the model on some subsets while evaluating it on the rest. For instance, k-fold cross-validation divides the data into k subsets and trains the model k times, each time holding out a different subset for evaluation and training on the remaining k - 1; the k scores are then averaged.
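The index bookkeeping behind k-fold cross-validation can be sketched as follows. `k_fold_indices` is a hypothetical helper written for this article. It assumes the samples have already been shuffled, which is usually done before splitting.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

# With 10 samples and k = 5, every fold is an 80/20 split
folds = list(k_fold_indices(10, 5))
for train, test in folds:
    print("train:", train, "test:", test)
```

Each sample lands in the held-out set exactly once, so every data point contributes to both training and evaluation across the k runs.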

During training, the model learns from the data using algorithms such as linear regression, decision trees, or neural networks. After the training phase, validation techniques are applied to assess how well the model is performing. It is vital to avoid overfitting, which occurs when the model learns the training data too well, capturing noise rather than the underlying pattern. To combat overfitting, techniques such as regularization and early stopping can be implemented.
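Early stopping can be illustrated without any training loop by looking only at a sequence of validation losses. In this sketch the loss values are invented and `patience` (how many non-improving epochs to tolerate) is an assumed parameter name, though similar settings exist in most training frameworks.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses."""
    best_loss, best_epoch, waited = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Validation loss improved: remember this epoch and reset the counter
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Validation loss falls, then rises as the model starts to overfit (illustrative)
val_losses = [0.90, 0.70, 0.60, 0.65, 0.70, 0.80]
best_epoch, stop_epoch = early_stopping(val_losses)
print(best_epoch, stop_epoch)
```

Training halts once the validation loss has failed to improve for `patience` consecutive epochs, and the model saved at `best_epoch` is the one that would be kept.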

Evaluation Metrics for Model Performance

Evaluating the performance of a machine learning model involves using various metrics that provide insight into its effectiveness. Key metrics include accuracy, precision, recall, and F1-score. Accuracy measures the proportion of correct predictions made by the model. However, accuracy alone can be misleading, especially in cases of imbalanced datasets. In such scenarios, precision and recall become more critical.

Precision indicates the number of true positive results divided by the total predicted positives, while recall measures the number of true positives divided by the actual positives. The F1-score is the harmonic mean of precision and recall, providing a single measure that balances both metrics. It’s especially useful when seeking a balance between precision and recall in situations where the class distribution is uneven.

These metrics can be visualized using confusion matrices, which summarize the performance of the model by showing the true positives, false positives, true negatives, and false negatives. This provides a clear picture of where the model is succeeding and where it is falling short.
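The following worked example computes these metrics from the four confusion-matrix counts. The labels are made up to mimic an imbalanced dataset with three positives out of ten examples, and the helper names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives, true negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn

def precision_recall_f1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0  # harmonic mean
    return precision, recall, f1

# Imbalanced example: only 3 of 10 samples are positive
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
print(tp, fp, fn, tn, accuracy, precision, recall, f1)
```

Here accuracy comes out at 0.7 even though the model finds only one of the three positive cases (recall of about 0.33). This is the kind of gap that makes accuracy misleading on imbalanced data.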

Importance of Cross-Validation and Overfitting

Cross-validation plays a critical role in ensuring that a machine learning model is robust and generalizes well to unseen data. By assessing the model’s performance across various subsets of data, it ensures that the results are not dependent on a specific random split of the dataset. This method provides a more reliable estimate of model performance and is essential in the decision-making process concerning model selection.

Overfitting is a common challenge in machine learning, particularly with complex models that may fit the training data too closely while failing to perform well on new data. Recognizing the signs of overfitting early in the training process is crucial. Strategies such as employing simpler models, adding dropout layers in neural networks, or utilizing regularization techniques can mitigate this issue. By maintaining a balance between fitting the training data and ensuring generalization, practitioners can enhance the performance and reliability of their machine learning models.

Ethical Considerations in machine learning must be addressed to ensure responsible use.

Machine learning (ML) is a powerful tool that can drive innovation and efficiency across various sectors. However, the ethical implications surrounding its deployment cannot be overlooked. The responsible use of ML requires a careful examination of algorithmic biases and their potential societal impacts, as well as data privacy concerns that arise from data-driven technologies.

Impact of Biased Algorithms on Society

Algorithmic bias occurs when an ML model reflects the prejudices present in its training data, resulting in unfair or discriminatory outcomes. This issue poses significant ethical implications, particularly when biased algorithms are used in sensitive areas such as hiring, lending, or law enforcement. For instance, a recruitment tool trained predominantly on data from successful male candidates may inadvertently favor male applicants over equally qualified female candidates. Such biases can perpetuate existing inequalities, leading to systemic discrimination in various sectors.

The impact of these biases on society can be profound. Biased algorithms can reinforce stereotypes, marginalize already disadvantaged groups, and erode trust in institutions that integrate these technologies. Moreover, as ML systems become more prevalent in decision-making processes, the consequences of algorithmic bias can lead to wider social disparities, affecting access to opportunities, services, and justice.

“Machine learning amplifies the consequences of bias if not addressed, creating a ripple effect throughout society.”

Data Privacy Concerns in Machine Learning Applications

Data privacy is another major ethical concern in the field of machine learning. The reliance on vast amounts of data for training models raises questions about how this data is collected, stored, and used. Many ML applications require personal information, which can expose users to unauthorized access or misuse.

With regulations like the General Data Protection Regulation (GDPR) in place, organizations are required to implement stricter measures to protect user data. GDPR mandates transparency in data processing and grants individuals rights regarding their personal information, including the right to access and erase their data. Non-compliance can lead to severe penalties, making it crucial for companies to adopt ethical data practices.

Regulations Affecting Machine Learning Technologies

A number of regulations are shaping the landscape of machine learning, promoting ethical practices and accountability. The GDPR is a prime example, affecting how organizations can use personal data in training algorithms. It requires a lawful basis for processing personal data, of which user consent is one, and it promotes data minimization along with safeguards such as pseudonymization or anonymization to protect individuals’ identities.

Similarly, the California Consumer Privacy Act (CCPA) provides residents of California with rights regarding their personal data, including the ability to opt out of data sales and request disclosures about how their data is used. These regulations not only protect users but also hold organizations accountable for their data practices, ultimately fostering a more ethical approach to machine learning.

“Regulations like GDPR and CCPA are essential in guiding the ethical use of machine learning technologies.”

The Future of Machine Learning holds exciting possibilities and challenges.

The landscape of machine learning (ML) is evolving rapidly, bringing both opportunities and challenges. With advancements in technology and increased data generation, new trends are emerging that are reshaping the industry. As we delve into these trends, it becomes clear that the future of ML is not just about algorithmic enhancements but also about the broader implications of these technologies across various sectors.

Emerging trends in machine learning

Several key trends are currently steering the direction of machine learning. These developments not only enhance existing methodologies but also pave the way for innovative applications in diverse fields. The following points highlight some of the most significant trends to watch:

- Automated Machine Learning (AutoML): This trend simplifies the model-building process, making machine learning accessible to non-experts. AutoML tools allow for automatic selection, training, and tuning of models, streamlining workflows and reducing the need for extensive expertise.

- Federated Learning: This decentralized approach allows for collaborative model training without needing to share raw data. By using data from various sources while keeping it local, federated learning enhances data privacy and security, which is increasingly vital in today’s data-driven world.

- Explainable AI (XAI): As ML applications become critical in decision-making, understanding how models arrive at conclusions is essential. XAI focuses on making machine learning models more interpretable and transparent, ensuring that users can trust and validate their outputs.

- Transfer Learning: This technique allows models trained on one task to be adapted for another, vastly improving efficiency and performance with limited data. Transfer learning is particularly beneficial in fields like healthcare, where labeled data can be scarce.

- Integration of AI with IoT: The convergence of machine learning with the Internet of Things (IoT) is creating smarter environments. Devices equipped with ML algorithms can analyze and learn from data in real-time, leading to enhanced automation and predictive maintenance.
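Of the trends above, federated learning is the easiest to sketch in code. One common aggregation scheme is federated averaging, shown here in heavily simplified form: each client trains locally and sends only its model parameters, and the server combines them weighted by how much data each client holds. The parameter vectors and client sizes are invented, and a real system would add many rounds, secure communication, and other safeguards.

```python
def federated_average(client_weights, client_sizes):
    """Combine client parameter vectors, weighting each by its local dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Parameters learned locally on two devices; the raw data never leaves them
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]   # number of local training examples per client

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

The server sees model parameters only, never the underlying records, which is what gives the approach its privacy appeal.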

Implications of quantum computing on machine learning advancements

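Quantum computing holds the potential to revolutionize machine learning by offering large speedups for certain classes of computation. Unlike classical computers, which process information as bits that are either 0 or 1, quantum computers use qubits, which can exist in superpositions of states. For some problems, quantum algorithms can exploit superposition and entanglement to reach an answer in far fewer steps than known classical methods. If such speedups carry over to machine learning workloads, they could accelerate the training of complex models and enhance optimization processes.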

The implications of quantum computing for machine learning could be profound. For instance, it may allow certain computations on large datasets to run much more efficiently, accelerating the analytics process. Quantum-enhanced machine learning algorithms might also explore more complex feature spaces and derive insights from quantities of data that classical algorithms struggle to process. These benefits remain largely theoretical today, but as the hardware matures, the integration of quantum computing into ML practices could redefine how data-driven decisions are made.

Potential impact of artificial general intelligence on machine learning practices

Artificial General Intelligence (AGI) represents a level of intelligence comparable to human cognitive abilities. Its emergence could fundamentally alter machine learning practices by allowing systems to not only learn from data but also understand and reason about it on a deeper level.

The potential of AGI in machine learning includes improved adaptability and decision-making capabilities. AGI systems could learn from fewer examples, generalize better across tasks, and continuously improve without human intervention. This transition from narrow AI to AGI would lead to innovative applications across various industries, including healthcare, finance, and logistics. The integration of AGI could enable personalized learning experiences, more sophisticated predictive analytics, and advanced automation solutions that improve overall efficiency and productivity.

As we look to the future of machine learning, the interplay between emerging trends, quantum computing advancements, and the pursuit of artificial general intelligence will shape a landscape filled with opportunities and challenges that we can hardly begin to imagine.

Closure

In conclusion, the journey through the intricacies of Machine Learning (ML) reveals a landscape filled with opportunities and challenges. As we embrace this technology, it is vital to address ethical considerations and ensure responsible usage. With ongoing advancements, including the impact of quantum computing and the prospects of artificial general intelligence, the future of ML promises to be both exhilarating and transformative. As we continue to explore and innovate, the potential to revolutionize industries remains boundless.

FAQ

What is Machine Learning (ML)?

Machine Learning (ML) is a subset of artificial intelligence that focuses on the development of algorithms that enable computers to learn from and make predictions based on data.

How does supervised learning differ from unsupervised learning?

Supervised learning uses labeled data to train models, while unsupervised learning finds patterns in unlabeled data.

Can Machine Learning be used for real-time applications?

Yes, Machine Learning can be applied in real-time scenarios such as fraud detection, autonomous driving, and personalized recommendations.

What role does data quality play in ML?

High data quality is essential for creating effective ML models, as poor data can lead to inaccurate predictions and unreliable results.

Are there any certifications for learning Machine Learning?

Yes, various platforms offer certifications in Machine Learning, including online courses from institutions like Coursera, edX, and Udacity.