Containerization is revolutionizing the way software is developed and deployed, breaking down traditional barriers and providing a streamlined approach to the software development lifecycle. By allowing developers to package applications along with their dependencies into standardized units, containerization fosters consistency across different computing environments. This not only simplifies the deployment process but also enhances collaboration among development and operations teams, leading to faster and more reliable software releases.

The rise of containerization has introduced an array of tools and frameworks, such as Docker and Kubernetes, that empower organizations to manage containers at scale. These technologies enable teams to adopt DevOps practices, automate workflows, and optimize resource utilization. As businesses strive for agility and innovation, understanding the nuances of containerization becomes essential for staying competitive in the ever-evolving tech landscape.

Containerization as an Essential Technology in Modern Software Development

Containerization has rapidly become a pivotal technology in the realm of software development, reshaping how applications are built, shipped, and deployed. By encapsulating applications and their dependencies into standardized units, containerization enhances the software development lifecycle, providing consistency and efficiency across various stages. This approach has paved the way for more agile methodologies and has significantly impacted collaboration between development and operations teams.

Containerization has revolutionized the software development lifecycle by streamlining processes and minimizing conflicts associated with different environments. Traditionally, developers faced challenges in ensuring that applications functioned identically in various setups, leading to the infamous “it works on my machine” syndrome. Through containerization, developers can package software with all its dependencies, allowing for seamless deployment across any system that supports container technology. This consistency reduces bugs and accelerates the testing phase, significantly shortening time-to-market for new features and applications.

Advantages of Containerization for Developers and Operations Teams

The benefits of containerization extend beyond just enhancing development workflows. The following advantages illustrate why containerization is favored among development and operations teams alike:

- Portability: Containers can run in any environment that provides a compatible container runtime, such as Docker or a Kubernetes cluster. Developers can build an image once and deploy it unchanged to development, staging, and production, provided the host operating system and CPU architecture match the image.

- Scalability: Containers can be easily scaled up or down depending on the application’s demand. This flexibility allows teams to handle varying workloads without significant overhead.

- Resource Efficiency: Containers are lightweight compared to traditional virtual machines, allowing multiple containers to run on a single host without the need for extensive resources, thus maximizing efficiency.

- Isolation: Each container runs in its own isolated user space, which improves security and stability. A failure in one container is much less likely to affect others, although containers on the same host still share its kernel.

Prominent tools and frameworks have emerged, solidifying containerization’s role in modern software development. Docker is perhaps the most widely recognized container platform, allowing developers to create, deploy, and manage containers effortlessly. Kubernetes, on the other hand, is an orchestration tool that automates the deployment, scaling, and management of containerized applications, making it essential for managing large-scale applications. Other notable tools include Apache Mesos and OpenShift, which further facilitate container management and orchestration.

In summary, containerization is not just a trend; it has become a core component of effective software development. By enhancing portability, scalability, and efficiency, it empowers developers and operations teams to work collaboratively towards delivering high-quality applications rapidly and reliably.

The Role of Docker in Containerization

Docker has emerged as a pivotal player in the world of containerization, changing how developers build, ship, and run applications. Its ability to encapsulate applications and their dependencies into lightweight containers has revolutionized software deployment, making it easier and more efficient. The popularity of Docker can be attributed to several key features that enhance its usability and integration within development workflows.

Key Features of Docker

Docker is widely recognized for its distinct features that contribute to its success in the containerization ecosystem. Understanding these attributes is crucial for leveraging Docker effectively in various application contexts. Here are three primary features:

- Lightweight Containers: Docker containers share the host OS kernel, which allows for faster startup times and reduced overhead compared to traditional virtual machines.

- Version Control and Image Management: Docker utilizes images that can be versioned, allowing developers to roll back to previous versions easily and manage application states through Docker Hub or private registries.

- Portability: Docker containers can run on any platform that supports Docker, ensuring consistent performance across different environments, such as local machines, cloud services, or on-premise servers.

Creating a Docker Container from Scratch

Creating a Docker container is a straightforward process that lets developers package an application with all of its dependencies. The following steps outline the essential components and actions required:

1. Install Docker: Begin by installing Docker on your system, ensuring that you meet the hardware and software requirements.

2. Write a Dockerfile: This text file defines the environment for your application. It includes instructions on how to build the container, such as the base image, application dependencies, and runtime configurations. A basic Dockerfile might include:

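```dockerfile
# Base image (pin a specific tag instead of "latest" for reproducible builds)
FROM ubuntu:latest
# Install the Python runtime the application needs
RUN apt-get update && apt-get install -y python3
# Copy the application source into the image and run from that directory
COPY . /app
WORKDIR /app
# Default command executed when the container starts
CMD ["python3", "app.py"]
```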

3. Build the Image: With the Dockerfile ready, build the image using the Docker CLI command:

```
docker build -t my-app:latest .
```

4. Run the Container: Finally, run the container from the built image using:

```
docker run -d -p 5000:5000 my-app:latest
```

This process encapsulates the application, making it easy to deploy and manage.
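
The Dockerfile above expects an `app.py` in the build context, and the `docker run` command publishes port 5000, but the application itself is not shown. As a minimal sketch, assuming a plain HTTP service built only on the Python standard library (the `HelloHandler` class, the `make_server` helper, and the response text are all hypothetical), such a file might look like this:

```python
# app.py: hypothetical application for the Dockerfile above (an assumption, not the author's code)
from http.server import BaseHTTPRequestHandler, HTTPServer

PORT = 5000  # matches the container port in `docker run -p 5000:5000`


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=PORT):
    # Listen on all interfaces so the published port can reach the server.
    return HTTPServer(("0.0.0.0", port), HelloHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Binding to `0.0.0.0` rather than `127.0.0.1` matters here: a server that listens only on the loopback interface inside the container is not reachable through the published port.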

Integration with Other Technologies in the Container Ecosystem

Docker’s ability to integrate seamlessly with various technologies enhances its functionality and flexibility within the broader container ecosystem. Key integrations include:

- Orchestration Tools: Docker works in conjunction with orchestration systems like Kubernetes and Docker Swarm, which automate the deployment, scaling, and management of containerized applications.

- Continuous Integration/Continuous Deployment (CI/CD): Docker is compatible with CI/CD tools like Jenkins, GitLab CI, and CircleCI, enabling automated testing and deployment pipelines that can streamline the development workflow.

- Service Mesh Solutions: Technologies like Istio and Linkerd provide observability, traffic management, and security features for microservices deployed in Docker containers, improving the resilience and performance of distributed applications.

Docker’s robust ecosystem and its ability to work with these technologies facilitate the development of scalable, efficient, and manageable applications, making it a cornerstone of modern software engineering practices.

Comparing Containerization with Virtualization

Containerization and virtualization are two pivotal technologies in the realm of modern computing, often utilized to optimize resource management and application deployment. While both approaches aim to enhance software delivery, they do so in fundamentally different ways, with unique implications on efficiency, scalability, and performance.

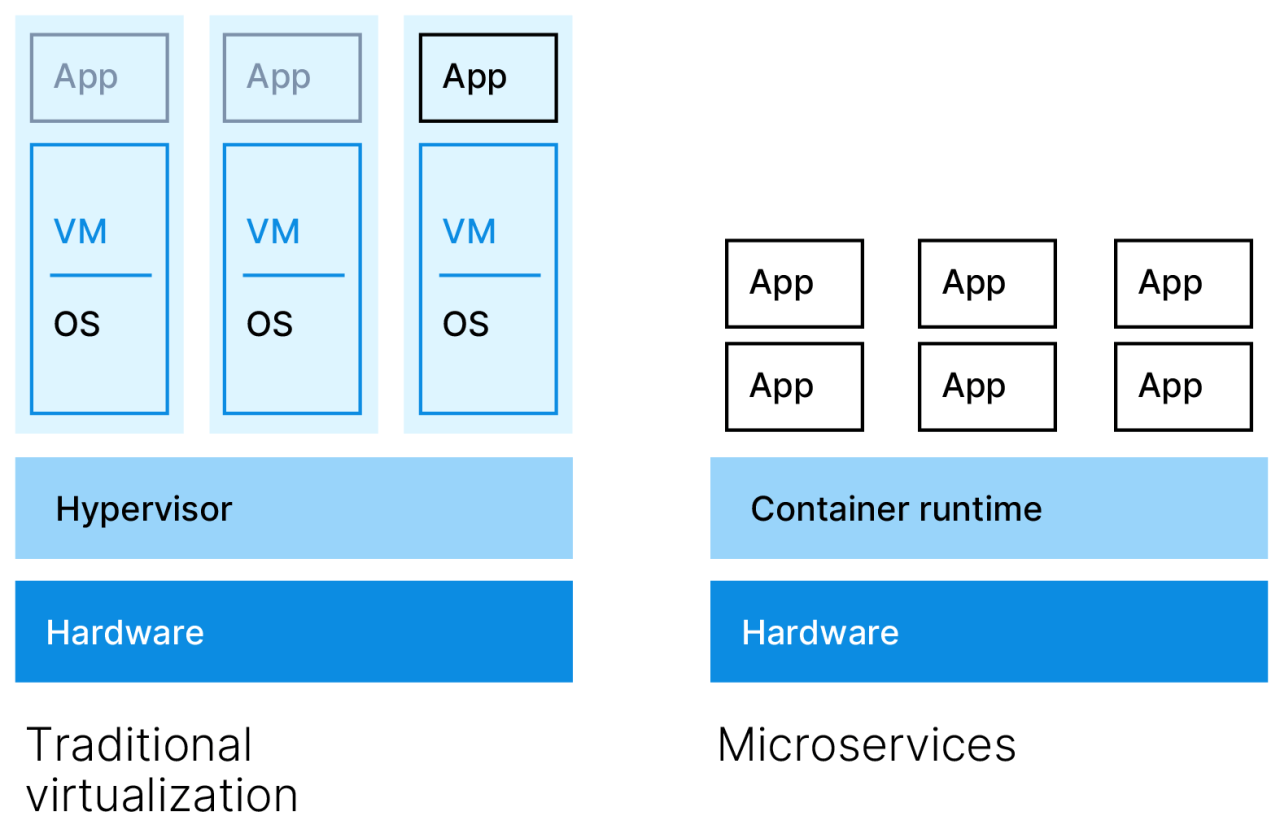

The core difference between containerization and virtualization lies in how resources are managed and utilized. Virtualization involves creating multiple virtual machines (VMs) that run on a hypervisor and emulate hardware resources. Each VM operates as an independent instance, complete with its own operating system, consuming significant resources. In contrast, containerization utilizes a shared operating system kernel to run multiple isolated user-space instances, known as containers. Each container packages an application and its dependencies, but they share the underlying OS, leading to reduced overhead.

Advantages of Containerization over Traditional Virtualization Approaches

Containerization presents several advantages compared to traditional virtualization, making it a preferred choice for many developers and IT operations:

1. Resource Efficiency: Containers are lightweight and share OS resources, which results in lower overhead compared to VMs that require separate OS instances. This allows more containers to run on the same hardware compared to virtual machines.

2. Faster Startup Times: Containers typically start within seconds, as they do not need to boot an entire operating system. VMs, by contrast, must boot a full guest OS and can take anywhere from tens of seconds to several minutes to initialize.

3. Portability: Containers encapsulate the application and its environment, enabling them to run consistently across different environments, from development to production. This greatly reduces the “it works on my machine” problem caused by differences between environments.

4. Scalability: Container orchestration tools like Kubernetes facilitate the scalable deployment of applications, allowing for easier management and scaling of containerized apps compared to VMs.

5. Simplified DevOps Practices: The containerization model aligns seamlessly with microservices architectures, promoting DevOps practices and continuous integration/continuous deployment (CI/CD) pipelines.

To illustrate the differences in resource utilization between containers and VMs, consider the following comparison:

| Feature | Containers | Virtual Machines |
|---|---|---|
| Resource Overhead | Low | High |
| Startup Time | Seconds | Minutes |
| Isolation Level | Process-level | Hardware-level |
| Operating System | Shared | Separate for each VM |
| Scalability | Highly scalable | Less scalable |

The contrast in resource utilization reflects the inherent efficiencies of containerization, making it a compelling choice for modern application development and deployment scenarios. By leveraging lightweight containers, organizations can maximize their resource utilization, enhance agility, and facilitate a more dynamic approach to application management.
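
To make the resource-overhead comparison concrete, here is a back-of-the-envelope calculation in Python. All figures (host memory, application footprint, and per-instance overhead) are illustrative assumptions, not measurements; real overhead varies widely with the guest OS, hypervisor, and container runtime.

```python
def instances_per_host(host_mem_mib, app_mem_mib, overhead_mib):
    """Return how many instances fit in host memory, given a fixed per-instance overhead."""
    return host_mem_mib // (app_mem_mib + overhead_mib)


# Assumed, illustrative numbers (not benchmarks):
HOST_MEM_MIB = 64 * 1024        # a host with 64 GiB of RAM
APP_MEM_MIB = 512               # memory used by the application itself
VM_OVERHEAD_MIB = 1024          # guest OS and hypervisor overhead per VM
CONTAINER_OVERHEAD_MIB = 16     # runtime overhead per container

vms = instances_per_host(HOST_MEM_MIB, APP_MEM_MIB, VM_OVERHEAD_MIB)
containers = instances_per_host(HOST_MEM_MIB, APP_MEM_MIB, CONTAINER_OVERHEAD_MIB)

print(f"{vms} VMs vs {containers} containers")  # prints: 42 VMs vs 124 containers
```

Under these assumptions the same host fits roughly three times as many containers as VMs; the ratio shrinks as the application's own footprint grows relative to the overhead.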

Security Challenges in Containerized Environments

Containerization has transformed the way applications are developed, deployed, and managed, offering enhanced flexibility and scalability. However, the adoption of containers also introduces unique security challenges that organizations must address to protect their applications and data. Understanding these vulnerabilities and implementing robust security practices is essential to safeguarding containerized environments.

Containerized applications are susceptible to various security vulnerabilities, primarily due to their shared kernel architecture. This characteristic can allow malicious actors to exploit vulnerabilities in one container to access others, potentially leading to data breaches or service disruptions. Additionally, reliance on third-party images can introduce unpatched vulnerabilities or malware. Containers also require configuration and management of orchestration tools, which can lead to misconfigurations that expose services to attacks. Such vulnerabilities underscore the need for a comprehensive security approach tailored to the unique challenges posed by containerization.

Potential Security Vulnerabilities in Containerization

Several key vulnerabilities can compromise the security of containerized environments. Recognizing these threats is crucial for teams aiming to enhance their security posture.

- Insecure Container Images: Many organizations use publicly available container images that may contain unpatched vulnerabilities or malware.

- Shared Kernel Vulnerabilities: Containers share the underlying host OS kernel, which can allow an attacker to exploit a vulnerability in one container to gain access to others.

- Misconfiguration: Incorrectly configured orchestration or network policies can expose services to unauthorized access or data leaks.

- Insufficient Isolation: Poorly designed container architectures can lead to insufficient isolation between containers, increasing the risk of lateral movement by attackers.

- Dependency Issues: Containers often rely on various libraries and dependencies, which can introduce vulnerabilities if not regularly updated.

Methods for Securing Containerized Applications

To mitigate security risks, organizations can adopt several strategies throughout the development lifecycle of containerized applications. Implementing these methods can significantly enhance security and reduce vulnerabilities.

- Image Scanning: Regularly scanning container images for known vulnerabilities before deployment is essential. Tools like Clair or Trivy can automate this process.

- Least Privilege Principle: Running containers with the least privileges necessary limits the potential damage from any security incidents.

- Runtime Protection: Implementing runtime security tools can help monitor container activity and detect suspicious behavior in real-time.

- Network Policies: Utilizing network segmentation and policies can restrict communication between containers and minimize the attack surface.

- Regular Updates: Keeping container images and dependencies up-to-date is crucial to addressing known vulnerabilities and reducing security risks.
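
Some of these checks can be approximated before an image is even built. The sketch below is a hypothetical, deliberately simple linter (the function name `lint_dockerfile` and its two rules are assumptions made for illustration) that flags a base image without a pinned tag and a missing non-root `USER` instruction. It complements, and does not replace, a real scanner such as Trivy or Clair.

```python
def lint_dockerfile(text):
    """Return a list of warning strings for a Dockerfile passed as text."""
    warnings = []
    runs_as_root = True  # heuristic: assume root unless a USER instruction says otherwise

    for raw_line in text.splitlines():
        parts = raw_line.strip().split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        instruction, args = parts[0].upper(), parts[1:]

        if instruction == "FROM":
            # Ignore flags such as --platform=... and take the image reference.
            images = [arg for arg in args if not arg.startswith("--")]
            image = images[0] if images else ""
            name = image.rsplit("/", 1)[-1]  # drop any registry or namespace prefix
            pinned_by_digest = "@" in name
            tag = name.split(":", 1)[1] if ":" in name else ""
            if image != "scratch" and not pinned_by_digest and tag in ("", "latest"):
                warnings.append(f"base image '{image}' is not pinned to a specific tag")
            runs_as_root = True  # new build stage: assume root again (a base image may differ)
        elif instruction == "USER" and args:
            user = args[0].split(":", 1)[0]  # strip an optional ":group" suffix
            runs_as_root = user in ("root", "0")

    if runs_as_root:
        warnings.append("no non-root USER instruction: the container will run as root")
    return warnings


example = """FROM ubuntu:latest
RUN apt-get update && apt-get install -y python3
CMD ["python3", "app.py"]
"""
for warning in lint_dockerfile(example):
    print(warning)
```

Both rules are heuristics: a base image may already switch to a non-root user, and a pinned tag says nothing about whether the image contains known vulnerabilities.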

Best Practices for Enhancing Container Security

Adopting best practices is vital for strengthening container security across the development lifecycle. Teams should incorporate these practices into their workflows to build more secure applications.

- Use Trusted Base Images: Always start with trusted base images from reputable sources to minimize vulnerabilities.

- Implement CI/CD Security: Integrate security checks within the Continuous Integration/Continuous Deployment (CI/CD) pipeline to identify and address vulnerabilities early.

- Monitor and Audit: Regularly monitor container activity and perform audits to detect anomalies and ensure compliance with security policies.

- Establish Incident Response Plans: Having a robust incident response plan in place ensures quick action can be taken in case of a security breach.

- Educate Teams: Ongoing training and education for development and operations teams on container security best practices fosters a security-first culture.

The Future of Container Orchestration

As the tech landscape continues to evolve, container orchestration has become a pivotal element in managing cloud-native applications. The evolution of these orchestration tools, particularly Kubernetes, marks a significant turning point in how developers deploy and manage applications in containerized environments. This discussion will explore the ongoing advancements and the promising future of container orchestration technologies.

Kubernetes’ Evolution and Significance

Kubernetes has emerged as the dominant player in container orchestration, revolutionizing the deployment and scaling of applications. Originally developed by Google, Kubernetes has grown to become an open-source standard that facilitates the automation of deployment, scaling, and operations of application containers across clusters of hosts. Its adaptability and extensive community support have led to a robust ecosystem of tools and integrations, making it indispensable for organizations adopting cloud-native strategies.

Some key aspects that illustrate Kubernetes’ significance include:

- Extensibility: Kubernetes supports a range of plugins and extensions, enabling organizations to customize their deployments to fit specific needs.

- Declarative Configuration: Users can define the desired state of their applications, allowing for automatic adjustments and recovery, which enhances reliability.

- Community and Ecosystem: A vast community contributes to continuous development, providing an array of tools and services that complement Kubernetes, further simplifying orchestration tasks.
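
The declarative model can be illustrated with a toy reconciliation step: compare the declared desired state with the observed state and derive the actions that close the gap. This is a conceptual sketch in Python, not Kubernetes code; the `reconcile` function, the workload names, and the action tuples are all hypothetical.

```python
def reconcile(desired, actual):
    """Return the actions that move `actual` toward `desired`.

    Both arguments map a workload name to its replica count.
    Each action is a tuple: (verb, workload name, number of replicas).
    """
    actions = []
    for name, want in desired.items():
        have = actual.get(name, 0)
        if have < want:
            actions.append(("start", name, want - have))
        elif have > want:
            actions.append(("stop", name, have - want))
    # Anything running that is no longer declared gets removed.
    for name, have in actual.items():
        if name not in desired:
            actions.append(("stop", name, have))
    return actions


desired_state = {"web": 3, "worker": 1}
observed_state = {"web": 1, "cache": 2}
print(reconcile(desired_state, observed_state))
# prints: [('start', 'web', 2), ('start', 'worker', 1), ('stop', 'cache', 2)]
```

An orchestrator repeats a comparison like this continuously, which is why a crashed container is replaced without anyone issuing a new command: the observed state drifts, and the next pass corrects it.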

Scalability and Resource Management in Cloud Environments

Container orchestration plays a crucial role in enhancing scalability and optimizing resource management within cloud environments. By automating the deployment and scaling of applications, orchestration tools allow businesses to respond dynamically to varying workloads.

The impact of container orchestration on these factors can be summarized as follows:

- Auto-scaling: Kubernetes can automatically adjust the number of running pod replicas in response to observed load, such as CPU utilization, maintaining performance without manual intervention.

- Resource Allocation: Orchestration tools optimize resource utilization by distributing workloads efficiently across available infrastructure, reducing waste and lowering costs.

- Load Balancing: Integrated load balancing ensures that traffic is evenly distributed across containers, enhancing reliability and performance of applications.
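
As an illustration of auto-scaling, the Kubernetes Horizontal Pod Autoscaler documentation describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements that formula in Python with a simple clamp to a replica range. It deliberately omits the tolerance band, stabilization window, and readiness handling of the real controller, and the function and parameter names are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified HPA-style calculation: scale replicas in proportion to load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured replica range.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))   # prints: 6
# 3 replicas at 50% average CPU with a 100% target: scale in.
print(desired_replicas(3, current_metric=50, target_metric=100))  # prints: 2
```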

Emerging Trends and Technologies in Container Orchestration

The landscape of container orchestration is continuously evolving, driven by emerging trends and technologies that promise to shape its future. Notable trends include:

- Serverless Computing: Integrating serverless architectures with container orchestration can lead to even greater efficiency and resource management, allowing developers to focus on code rather than infrastructure.

- AI and Machine Learning: Implementing AI-driven analytics into orchestration tools can enhance decision-making, improving resource allocation and predictive scaling capabilities.

- Edge Computing: As the demand for low-latency applications grows, orchestration tools are adapting to manage containerized workloads at the edge, improving performance for IoT applications and services.

Real-World Applications of Containerization Across Industries

Containerization has emerged as a game-changing technology across various sectors, enabling organizations to enhance their operational efficiency, scalability, and deployment speed. Companies are leveraging this technology to streamline their development processes, improve resource utilization, and facilitate seamless collaboration among teams. The adaptability of containerization supports diverse applications, illustrating its significance in today’s tech-driven landscape.

Containerization not only simplifies application deployment but also addresses several challenges organizations face in their digital transformation journeys. From managing microservices to maintaining consistency in development and production environments, businesses have turned to containerization to solve complex operational issues. Below are illustrative case studies that showcase successful implementations and the hurdles faced by organizations during their containerization endeavors.

Case Studies of Successful Containerization Implementations

Numerous organizations have integrated containerization into their operations with notable success. For instance:

- Spotify transformed its music streaming service by adopting containerization to improve its service delivery and scale its development teams. By using Docker containers, Spotify managed to consistently deploy new features while maintaining system reliability.

- Netflix employs containerization extensively to manage its microservices architecture, allowing for rapid deployment and easy scaling of services. Challenges included ensuring security across numerous containers and managing dependencies.

- The New York Times has utilized containerization to streamline their content delivery. The adoption of Kubernetes for container orchestration helped them enhance resource efficiency and dynamic scaling, though they faced difficulties in migrating legacy applications to this modern infrastructure.

“Containerization allows teams to innovate at a faster pace while maintaining system stability.”

Challenges Faced During Containerization Journeys

While containerization offers numerous advantages, organizations have encountered specific challenges as they embarked on this journey. Key issues included:

- Complexity in Migration: Transitioning existing applications to containers often involves significant rewrites, which can be resource-intensive and time-consuming.

- Security Concerns: Ensuring the security of containerized applications and managing vulnerabilities within containers remain critical challenges.

- Training Requirements: Teams may require training to effectively manage container orchestration tools, adding to the initial investment in time and resources.

Industries Benefiting from Containerization

Containerization has permeated various industries, each deriving unique benefits tailored to their operational needs. Here is a list of industries that have successfully implemented containerization and specific applications:

- Finance: Used for high-frequency trading applications to ensure rapid deployment and minimal downtime.

- Healthcare: Facilitates the development of medical applications that require secure and compliant environments for patient data.

- Retail: Supports e-commerce platforms that need to scale quickly during peak shopping seasons, ensuring consistent user experiences.

- Telecommunications: Enhances network management systems, allowing for quick adjustments to services and better resource allocation.

- Gaming: Enables developers to deploy game updates and patches rapidly, improving user engagement through consistent content delivery.

- Education: Supports learning management systems that require reliable performance and the ability to scale based on enrollment fluctuations.

- Automotive: Streamlines the development of in-car software systems that demand high reliability and frequent updates.

“The adaptability of containerization across industries is a testament to its versatility and effectiveness.”

Through these case studies and industry insights, it is evident that containerization is not just a trend; it’s a fundamental shift in how modern organizations operate, enhancing their ability to innovate and respond to market demands efficiently.

Best Practices for Implementing Containerization in Enterprises

Containerization has emerged as a transformative technology for enterprises, enabling agility, scalability, and efficiency in software development and deployment. However, adopting this technology requires careful planning and execution. This guide walks through the essential steps for a successful transition to containerized environments, covering common pitfalls and how to foster a container-native culture.

Steps for Adopting Containerization

Implementing containerization involves several key steps that ensure a seamless transition. Each step builds upon the previous one, creating a structured approach to adoption.

- Assess Current Infrastructure: Understand your existing architecture, applications, and workflows to identify areas that can benefit from containerization.

- Choose the Right Tools: Select container orchestration tools (like Kubernetes or Docker Swarm) and CI/CD pipelines that align with your organization’s needs.

- Start Small: Begin with non-critical applications to test containerization processes and validate the technology before scaling up.

- Develop a Migration Strategy: Create a detailed plan outlining how applications will be transitioned to containers, including timelines and resource allocations.

- Implement Security Measures: Ensure that security best practices are integrated from the start, including image scanning and network policies.

- Monitor Performance: Utilize monitoring tools to track the performance of containerized applications and infrastructure, allowing for adjustments as needed.

- Scale Gradually: After initial successes, slowly expand containerization to more applications while continuously refining processes based on feedback.

Common Pitfalls in Transitioning to Container Technologies

Transitioning to container technologies can present several challenges. Awareness of these pitfalls helps in strategizing effective mitigation measures.

Underestimating the complexity of microservices architecture can lead to increased operational overhead.

Some pitfalls to consider include:

- Inadequate Training: Failing to equip teams with proper training in container technologies can hinder successful implementation.

- Poor Planning: Skipping the assessment phase often results in unoptimized resource usage and application performance issues.

- Ignoring Networking Challenges: Not addressing networking complexities leads to communication issues between containers.

- Neglecting Backup and Recovery: Overlooking data management can result in data loss in case of container failures or crashes.

Fostering a Container-Native Culture

Creating a container-native culture within an organization is essential for maximizing the benefits of containerization. This culture promotes agility, collaboration, and innovation.

A culture that embraces continuous learning and experimentation can significantly enhance the success of containerization efforts.

To foster this environment, organizations should:

- Encourage Collaboration: Break down silos between development and operations teams to promote a DevOps mentality.

- Support Continuous Learning: Provide ongoing training opportunities and resources to keep teams up-to-date with the latest container technologies.

- Promote Experimentation: Allow teams to experiment with new ideas and technologies, creating a safe space for innovation.

- Recognize Contributions: Acknowledge and reward team efforts in adopting and optimizing container practices.

Monitoring and Logging in Containerized Applications

In the rapidly evolving landscape of software development, containerization has emerged as a game-changer, enabling developers to build, ship, and run applications in a consistent environment. However, with this new paradigm comes the need for robust monitoring and logging solutions tailored specifically for containerized applications. Effective monitoring not only ensures the health and performance of applications but also aids in troubleshooting and optimizing resource usage.

Monitoring solutions that are designed for containerized environments play a crucial role in maintaining system integrity and performance. Containers are ephemeral and dynamic, which means they can scale up or down quickly, and their lifecycles are often short-lived. This makes traditional monitoring methods inadequate. A tailored monitoring solution offers real-time insights into container performance, resource consumption, and alerts for any anomalies. Moreover, it aids in visualizing the overall architecture, providing metrics that can guide resource allocation decisions and help in identifying bottlenecks before they impact the user experience.

Overview of Popular Logging Frameworks Compatible with Containerization

Logging is equally vital in a containerized environment, as it allows developers and operators to track application behavior and diagnose issues across multiple containers. The right logging framework helps in aggregating logs, facilitating easier searches and analysis.

A few popular logging frameworks that are commonly used with containerized applications include:

- Fluentd: A flexible, open-source data collector that unifies the data collection and consumption processes. It can gather logs from various sources and route them to different destinations.

- Logstash: Part of the Elastic Stack, Logstash is a powerful pipeline for managing events and logs. It collects, parses, and forwards logs to a store such as Elasticsearch for later use in analytics.

- Promtail: A log collection agent that integrates seamlessly with Grafana Loki, Promtail gathers logs and sends them to Loki for storage and visualization.

- Graylog: An open-source logging platform for log management that offers powerful search and analysis capabilities, tailored for complex environments including containers.

The right choice of logging framework can be pivotal in ensuring that logs are structured in a way that supports effective analysis.
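
A common convention in containerized applications is to write one structured record per line to standard output and let a collector such as Fluentd or Promtail pick it up from there. A minimal sketch using only Python's standard `logging` and `json` modules follows; the field names (`ts`, `level`, `logger`, `message`) and the `build_logger` helper are illustrative assumptions, not a schema required by any of the tools above.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Format each log record as a single-line JSON object."""

    def format(self, record):
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name="app", stream=sys.stdout):
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers if called twice
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # keep records out of the root logger's handlers
    return logger


logger = build_logger()
logger.info("service started on port %d", 5000)
```

Writing to standard output instead of a file inside the container keeps the logs available after the container is gone, since the runtime and the collector own the stream rather than the container's own filesystem.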

Summary of Monitoring Tools and Their Features

Understanding the capabilities of various monitoring tools is essential for effective container management. Below is a table summarizing popular monitoring tools along with their key features:

| Monitoring Tool | Key Features |
|---|---|
| Prometheus | Time-series database, powerful querying language, and support for alerting. |
| Datadog | Integrated monitoring and analytics platform with APM, real-time dashboards, and anomaly detection. |
| Grafana | Open-source analytics and monitoring platform that integrates with various data sources, including Prometheus. |
| Sysdig | Container intelligence tools for monitoring, security, and troubleshooting. |
| New Relic | Full-stack observability with APM, infrastructure monitoring, and real-time insights. |
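
For a sense of what a tool like Prometheus actually collects: it scrapes metrics over HTTP in a line-oriented text format. The sketch below renders a counter in that format with plain Python. It is an illustration only (production code would normally use an official client library), and the metric name, label, and `render_counter` helper are hypothetical.

```python
def render_counter(name, help_text, samples):
    """Render one counter metric in the Prometheus text exposition format.

    `samples` maps a tuple of (label, value) pairs to the counter's numeric value.
    Label values are not escaped here, so they must not contain quotes or newlines.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{val}"' for key, val in labels)
        suffix = f"{{{label_str}}}" if label_str else ""
        lines.append(f"{name}{suffix} {value}")
    return "\n".join(lines) + "\n"


text = render_counter(
    "http_requests_total",
    "Total HTTP requests handled.",
    {(("path", "/"),): 3, (("path", "/health"),): 12},
)
print(text, end="")
```

Each sample line carries the metric name, optional labels, and the current value; the monitoring server adds the timestamp when it scrapes the endpoint and stores the result as a time series.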

Wrap-Up

In summary, containerization stands as a pivotal technology that shapes the future of software development, offering advantages that range from improved resource efficiency to stronger isolation between workloads. As we look ahead, it’s clear that mastering containerization and its associated tools will be crucial for organizations aiming to thrive in a cloud-centric world. Embracing this technology not only unlocks the potential for innovation but also prepares businesses to navigate the complexities of modern software environments with confidence.

Questions and Answers

What is containerization?

Containerization is a lightweight virtualization method that allows applications to run in isolated environments called containers, ensuring consistency across various deployment stages.

How does containerization improve deployment speed?

Containerization packages applications with their dependencies, enabling faster, more reliable deployments and reducing the time spent on configuration and setup.

Can containerization be used with legacy applications?

Yes, legacy applications can be containerized, although it may require some adjustments or refactoring to fit into a containerized environment effectively.

What are the common challenges of containerization?

Common challenges include managing container orchestration, ensuring security, and maintaining visibility and monitoring across multiple containers.

Are containers the same as microservices?

No, while containers can host microservices, they are not the same. Microservices refer to an architectural style, while containers are a deployment method.