Hardware acceleration offloads demanding computations from a general-purpose CPU to hardware built for the job. As workloads become more data-intensive and latency-sensitive, it has become a standard way to make computing systems faster and more efficient at complex tasks.

By utilizing specialized hardware components, such as GPUs, FPGAs, and ASICs, hardware acceleration boosts performance in various applications, from gaming and scientific research to machine learning and artificial intelligence. This advancement not only improves processing times but also opens doors to innovative solutions across multiple industries.

Hardware Acceleration

Hardware acceleration is a method where specific computing tasks are offloaded from general-purpose CPUs to specialized hardware components, which are designed to perform those tasks more efficiently. This concept has gained significant traction in recent years as applications become more demanding and require higher performance to deliver seamless user experiences. By delegating tasks such as graphics rendering, data processing, and machine learning computations to dedicated hardware, systems can achieve better speed, efficiency, and performance while freeing up the CPU for other processes.

Hardware acceleration is integral in various domains of technology and computing, reflecting its broad applicability. For instance, in the realm of graphics, dedicated GPUs (Graphics Processing Units) handle complex rendering tasks in video games and software applications, providing smooth graphics and high frame rates. Additionally, video decoding and encoding processes in streaming applications often utilize hardware acceleration to enhance performance while reducing power consumption. Machine learning frameworks leverage hardware accelerators like TPUs (Tensor Processing Units) to perform large-scale computations swiftly, further demonstrating the concept’s relevance across different fields.

Applications and Benefits of Hardware Acceleration

The implementation of hardware acceleration brings numerous advantages to computing systems, enhancing their capabilities in various ways. The following are key benefits that underscore the importance of integrating hardware accelerators into systems:

- Increased Performance: Hardware accelerators can execute specific tasks much faster than general-purpose CPUs. For example, a dedicated GPU can simultaneously process thousands of threads, significantly speeding up tasks like image recognition or video rendering.

- Energy Efficiency: Offloading tasks to specialized hardware can lead to lower power consumption, as these components are optimized for their designated functions. For instance, using a hardware encoder for video streaming reduces the CPU’s workload and power usage compared to software encoding.

- Improved User Experience: Applications that utilize hardware acceleration can deliver smoother and more responsive interactions. For example, in gaming, hardware-accelerated graphics result in richer visuals and more fluid gameplay.

- Scalability: Hardware acceleration allows systems to scale efficiently, as adding more specialized hardware can enhance performance without necessitating a complete overhaul of the existing infrastructure. This is evident in data centers that employ multiple GPUs or TPUs to handle vast datasets for machine learning tasks.
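The energy-efficiency benefit comes down to simple arithmetic: energy is power multiplied by time, so a component that finishes sooner, or draws less power while working, uses less energy overall. The sketch below works through one example. The wattages and runtimes are made-up illustrative figures, not measurements of any real device, and `energy_joules` is a hypothetical helper.

```python
def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy used by a component drawing constant power for a given time."""
    return power_watts * seconds


# Hypothetical figures, chosen only to illustrate the trade-off.
SOFTWARE_ENCODE = {"power_watts": 65.0, "seconds": 100.0}  # CPU-only encode
HARDWARE_ENCODE = {"power_watts": 15.0, "seconds": 40.0}   # dedicated encoder block

if __name__ == "__main__":
    cpu = energy_joules(**SOFTWARE_ENCODE)
    hw = energy_joules(**HARDWARE_ENCODE)
    print(f"software: {cpu:.0f} J, hardware: {hw:.0f} J, saved: {1 - hw / cpu:.0%}")
```

Real savings depend on the device and workload, so measured power and runtime should replace these placeholders before drawing conclusions.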

“Hardware acceleration is not just about speed; it’s about optimizing resources effectively to achieve better overall system performance.”

The Types of Hardware Used for Acceleration

In the landscape of computing, hardware acceleration plays a pivotal role in optimizing performance across various applications. By leveraging specialized hardware, tasks that are traditionally processed by the CPU can be offloaded to more efficient components, resulting in enhanced speed and efficiency. This section delves into the different types of hardware that support acceleration, specifically focusing on GPUs, FPGAs, and ASICs.

Hardware Components Supporting Acceleration

The primary components that facilitate hardware acceleration include Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs). Each of these hardware types has unique characteristics that cater to different acceleration needs.

- Graphics Processing Units (GPUs): GPUs are designed to handle parallel processing tasks efficiently, making them suitable for graphics rendering and complex computations, such as machine learning and deep learning. With hundreds to thousands of cores, GPUs can process multiple tasks simultaneously, leading to significant performance improvements in data-intensive applications.

- Field-Programmable Gate Arrays (FPGAs): FPGAs offer flexibility in hardware configuration, allowing developers to program the logic circuits according to specific needs. This adaptability makes FPGAs ideal for applications requiring custom processing capabilities, such as real-time data processing and signal processing. FPGAs can achieve high performance with lower power consumption compared to traditional processors.

- Application-Specific Integrated Circuits (ASICs): ASICs are tailored for specific applications, which allows them to perform tasks with extreme efficiency. They are commonly used in cryptocurrency mining, video encoding, and network processing. While ASICs provide unmatched performance for their intended tasks, their lack of adaptability means they cannot be repurposed for other applications.

Comparative Analysis of Acceleration Capabilities

When comparing the performance of GPUs, FPGAs, and ASICs, several key factors come into play, including latency, throughput, and efficiency. Each hardware type excels in different areas, impacting their suitability for various use cases.

“GPUs are excellent for applications with high computational demands, while FPGAs offer customization and lower power consumption, and ASICs deliver unparalleled performance for targeted tasks.”

| Hardware Type | Performance Characteristics | Use Cases |
|---|---|---|
| GPUs | High parallel processing power, excellent for data-intensive tasks | Gaming, graphics rendering, machine learning |
| FPGAs | Customizable, moderate performance, lower power consumption | Real-time data processing, telecommunications, signal processing |
| ASICs | Maximum efficiency for specific tasks, limited versatility | Cryptocurrency mining, video compression, networking |

Through this comparative analysis, it is evident that the choice of hardware for acceleration should be guided by the specific requirements of the task at hand, balancing between performance, adaptability, and power efficiency. The advancement in hardware technology continues to enhance the capabilities of these components, shaping the future of accelerated computing.
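The comparison can be read as a rough decision rule. The following sketch encodes it as a small lookup. The `Workload` fields and the order of the checks are assumptions made for illustration, and a real selection would also weigh cost, production volume, and tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of what a task needs from an accelerator."""
    fixed_function: bool         # the algorithm will not change after deployment
    needs_reconfiguration: bool  # logic must stay reprogrammable in the field
    highly_parallel: bool        # many independent operations on large data


def suggest_accelerator(w: Workload) -> str:
    """Map a workload to the hardware type the comparison points to."""
    if w.needs_reconfiguration:
        return "FPGA"  # customizable logic, lower power consumption
    if w.fixed_function:
        return "ASIC"  # maximum efficiency, limited versatility
    if w.highly_parallel:
        return "GPU"   # high parallel throughput for data-intensive tasks
    return "CPU"       # nothing here calls for offloading
```

For example, `suggest_accelerator(Workload(False, False, True))` returns `"GPU"`, matching the machine learning row of the comparison.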

The Role of Software in Hardware Acceleration

The interaction between software and hardware is foundational to achieving significant performance enhancements through hardware acceleration. Understanding this relationship is crucial for developers and system architects who aim to optimize computing tasks, especially in environments demanding high efficiency, such as gaming, graphics rendering, and machine learning. The synergy between these two domains allows for more efficient processing and faster execution of complex tasks.

Software applications utilize hardware acceleration by offloading specific computational tasks to specialized hardware components, such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units). This division of labor allows CPUs (Central Processing Units) to focus on general computing tasks while the specialized hardware manages intensive data processing. As a result, overall system performance improves, leading to faster render times, smoother graphics, and quicker data analysis. Popular examples include video encoding software that uses GPUs to accelerate transcoding processes, resulting in substantial time savings.

Programming Languages and Frameworks Supporting Hardware Acceleration

Several programming languages and frameworks are designed to facilitate hardware acceleration, making it easier for developers to harness the power of specialized hardware.

One notable option is CUDA (Compute Unified Device Architecture), NVIDIA's parallel computing platform and programming model, which lets developers write programs that run on NVIDIA GPUs. This enables the parallel processing of data, which can significantly boost performance in applications like scientific simulations and deep learning.
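To make the parallel model concrete, the sketch below imitates in plain Python how a CUDA-style kernel assigns one array element to each thread. It is an emulation, not CUDA: `launch`, `vector_add_kernel`, and `vector_add` are hypothetical names, and the loops here run one after another, whereas a GPU would run the threads concurrently. The index calculation (block index times block size plus thread index) mirrors the usual CUDA idiom.

```python
import math


def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body executed by one logical thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):                        # guard threads past the end of the data
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for launching grid_dim blocks of block_dim threads."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add(a, b, block_dim=4):
    """Add two equal-length lists element by element using the emulated launch."""
    out = [0] * len(a)
    grid_dim = math.ceil(len(a) / block_dim)  # enough blocks to cover every element
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out
```

Because no thread depends on another thread's result, the work can be spread over as many hardware threads as the device offers, which is what makes this pattern a good fit for GPUs.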

Another influential framework is OpenCL (Open Computing Language), which provides a unified programming model for writing software that executes across heterogeneous platforms, including CPUs, GPUs, and other processors. By leveraging OpenCL, developers can maximize the performance of their applications across different hardware configurations.

Additionally, machine learning frameworks such as TensorFlow and PyTorch have built-in support for hardware acceleration. They simplify the process of deploying models on GPU or TPU hardware, allowing researchers and developers to train complex models faster and more efficiently.
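As a minimal sketch of how an application might opt into acceleration only when it is available, the helper below asks PyTorch whether a CUDA-capable GPU can be used and falls back to the CPU otherwise. `pick_device` is a hypothetical name, and the example assumes PyTorch may or may not be installed.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; may not be installed
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"running on: {pick_device()}")
```

With PyTorch installed, the returned string can be passed to `torch.device(...)` and then to a model or tensor's `.to(...)` method, so the same script runs on machines with and without a GPU.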

The choice of programming language and framework can have a substantial impact on the performance of applications utilizing hardware acceleration. Selecting the right tools enhances productivity and ensures that the full potential of hardware resources is realized.

“The integration of robust software frameworks with advanced hardware capabilities can dramatically reduce processing times and improve computational efficiency.”

Applications of Hardware Acceleration in Different Industries

Hardware acceleration has become a crucial component across various industries, driving efficiency, speed, and innovation. By leveraging specialized hardware, businesses can enhance processing capabilities and optimize performance for demanding applications. This has led to a significant transformation in how industries operate and serve their customers, paving the way for more advanced technologies.

Gaming Industry

The gaming industry has significantly benefited from hardware acceleration through the integration of graphics processing units (GPUs) and dedicated gaming consoles. These advancements allow for improved graphics, faster rendering, and smoother gameplay experiences.

- Real-time Ray Tracing: This technology simulates realistic lighting, shadows, and reflections, enhancing visual fidelity in games. Titles like “Cyberpunk 2077” and “Control” utilize ray tracing to deliver immersive environments.

- Virtual Reality (VR): Hardware acceleration is essential in VR applications, where high frame rates and low latency are critical for user experience. Devices like the Oculus Quest and Valve Index leverage GPUs for realistic simulations.

- Cloud Gaming: Services such as NVIDIA GeForce Now, and formerly Google Stadia (shut down in January 2023), run games on powerful servers with hardware acceleration and stream them over the internet, enabling users to play on less powerful devices.

Scientific Computing

In scientific research, hardware acceleration plays a vital role in processing complex simulations and data analysis. High-performance computing (HPC) systems equipped with specialized processors can handle large-scale computations efficiently.

- Climate Modeling: Researchers employ hardware acceleration to simulate climate systems, allowing for more accurate predictions about global warming and its impacts. Such simulations typically run across many compute nodes coordinated through standards like MPI (Message Passing Interface).

- Genomic Sequencing: The healthcare sector utilizes hardware acceleration to analyze vast amounts of genetic data, expediting the process of identifying genetic disorders and personalizing treatment plans.

- Physics Simulations: In particle physics, the Large Hadron Collider (LHC) relies on accelerated computing resources to process massive data sets from collisions, helping scientists explore fundamental questions about the universe.

Machine Learning

Machine learning has seen remarkable advancements through hardware acceleration, particularly with the use of tensor processing units (TPUs) and GPUs, which enhance the efficiency of training complex models.

- Deep Learning: Frameworks like TensorFlow and PyTorch leverage GPUs and TPUs to significantly reduce training times for neural networks, enabling faster development of applications such as image recognition and natural language processing.

- Autonomous Vehicles: Companies like Tesla use hardware acceleration to process data from numerous sensors in real-time, allowing self-driving cars to make instantaneous decisions based on their environment.

- Recommendation Systems: Platforms such as Netflix and Amazon utilize accelerated computing to analyze user behavior and preferences, improving the accuracy of content recommendations.

Emerging Trends in Hardware Acceleration

As industries continue to innovate, new trends are emerging in hardware acceleration that promise to shape the future.

- Edge Computing: The shift towards processing data closer to the source is driving the development of specialized hardware for IoT devices, enhancing real-time analytics and reducing latency.

- Quantum Computing: While still in its infancy, quantum hardware has the potential to revolutionize computing power, offering unprecedented capabilities for solving complex problems across various fields.

- Integration of AI in Hardware Design: Manufacturers are increasingly incorporating AI-driven designs in chips to optimize performance, enhance energy efficiency, and reduce costs.

Challenges and Limitations of Hardware Acceleration

The adoption of hardware acceleration in computing offers substantial performance improvements, yet it also presents a range of challenges and limitations. These can act as significant barriers to effective implementation, requiring careful consideration and planning. Understanding these challenges is vital for organizations looking to optimize their systems through hardware acceleration.

Technical Barriers and Limitations

Implementing hardware acceleration can introduce several technical barriers that need to be addressed. First, compatibility issues arise when integrating new hardware with existing systems. Legacy systems may not support the latest acceleration technologies, leading to potential performance bottlenecks or operational disruptions.

Another limitation is the complexity of programming and optimizing software for specific hardware configurations. This often requires specialized knowledge of hardware architecture, which can be a barrier for developers unfamiliar with such intricacies.

Additionally, the scalability of hardware acceleration can pose problems. As applications grow, the hardware must also scale accordingly, which may not be feasible without significant investment.

Cost Implications and Resource Requirements

The financial aspect of hardware acceleration is a critical factor to consider. Initial costs can be substantial, including expenses for new hardware, installation, and maintenance. Beyond the upfront investment, ongoing costs may arise from energy consumption and cooling requirements, especially when deploying high-performance computing systems.

Organizations must also factor in the resource requirements associated with training personnel to manage and utilize new technologies effectively. This can lead to additional expenses in terms of time and money, as staff may need to acquire new skills or knowledge to fully leverage hardware acceleration.

Potential Security Concerns

Security is a significant concern when utilizing hardware-accelerated systems. The introduction of specialized hardware can create vulnerabilities that may be exploited by malicious actors. For instance, certain hardware components might have firmware flaws that attackers can manipulate, leading to unauthorized access or data breaches.

Another security challenge is the proliferation of hardware-based side-channel attacks, which can exploit the physical characteristics of hardware to extract sensitive information. These vulnerabilities require constant vigilance and proactive security measures.

Furthermore, the reliance on specific hardware vendors can create supply chain risks. If a vendor experiences a breach or security incident, it may compromise the integrity of the entire system reliant on that hardware.

“Hardware acceleration can greatly enhance performance, but it also necessitates a careful evaluation of security risks and resource investments.”

Future Directions of Hardware Acceleration Technology

As hardware acceleration technology continues to evolve, it is essential to analyze both the current trends and future directions that are expected to shape this field. Ongoing research and innovative developments in this area promise to redefine performance benchmarks and efficiency across various applications. By exploring these advancements, we can gain insights into how hardware acceleration might transform industries in the coming years.

The future of hardware acceleration is being influenced by several key technologies, including artificial intelligence (AI), machine learning (ML), and edge computing. These innovations are driving a significant shift in how computations are executed, emphasizing the need for specialized hardware that can handle these complex tasks more efficiently. As a result, we witness a growing trend toward the integration of hardware acceleration in various devices and systems, ranging from smartphones to large-scale data centers.

Current Research and Developments in Hardware Acceleration

Recent research within the realm of hardware acceleration has highlighted several critical areas that indicate where the technology is headed. Notable efforts include the development of application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs), which are designed to optimize performance for specific tasks.

The advancements in these technologies can be linked to a few primary factors:

- Artificial Intelligence Optimization: Hardware accelerators are being designed to specifically enhance AI processing capabilities, allowing for faster and more efficient data analysis. For instance, Google’s Tensor Processing Units (TPUs) offer optimized performance for deep learning tasks, significantly reducing the time required for training neural networks.

- Energy Efficiency: As the demand for computational power increases, energy consumption becomes a critical concern. New designs focus on minimizing power usage without compromising performance, exemplified by low-power GPUs that maintain high throughput for machine learning applications.

- Edge Computing Growth: The shift towards computing closer to data sources necessitates hardware acceleration at the edge. This trend is being met with innovations in compact, efficient processors capable of handling AI tasks locally, reducing latency and bandwidth usage.

Key Players Advancing Hardware Acceleration

A number of prominent companies and organizations are leading the charge in hardware acceleration technology. These players are not only developing cutting-edge products but also influencing industry standards and future research directions. The following entities stand out in their contributions:

- NVIDIA: Renowned for their GPUs, NVIDIA has been at the forefront of integrating hardware acceleration in AI and gaming. Their CUDA platform enables developers to harness GPU power for varied applications, driving significant advancements in processing capabilities.

- Intel: With a strong focus on integrating hardware acceleration across its chipsets, Intel is advancing architectures that support both traditional computing tasks and specialized workloads such as AI and machine learning.

- AMD: As a competitor in the GPU market, AMD has been developing hardware that emphasizes both performance and power efficiency, catering to gaming and professional workloads alike. Their RDNA architecture is a prime example of this commitment.

- Google: Through its development of TPUs and ongoing innovations in AI hardware, Google is setting benchmarks for performance in cloud computing and AI tasks, emphasizing scalability and efficiency.

In summary, the landscape of hardware acceleration technology is poised for significant transformation driven by ongoing research, evolving technologies, and key industry players. As these trends continue to develop, we can expect hardware acceleration to play an increasingly vital role in shaping the future of computing.

Building a Hardware Acceleration System

Designing a hardware acceleration system requires careful planning and execution to ensure optimal performance and compatibility with existing technologies. This guide outlines the key considerations and steps to take when building a system that incorporates hardware acceleration, along with tips for performance optimization.

Step-by-Step Guide for Designing a Hardware Acceleration System

Creating a hardware acceleration system involves several critical stages. Below are the essential steps to follow:

1. Define Requirements: Identify the specific applications or tasks that will benefit from hardware acceleration. This will help in selecting the right type of accelerator, such as GPUs or FPGAs.

2. Select Appropriate Hardware: Choose the hardware components based on performance needs, budget, and compatibility. Factors to consider include processing power, memory capacity, and energy efficiency.

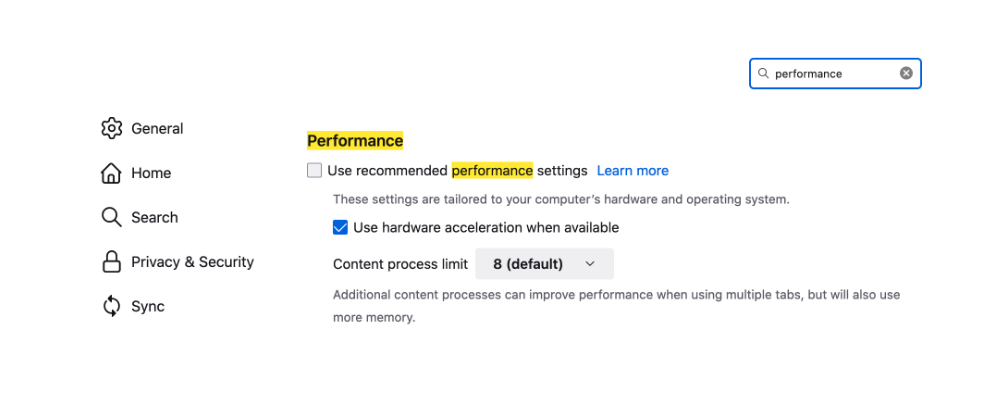

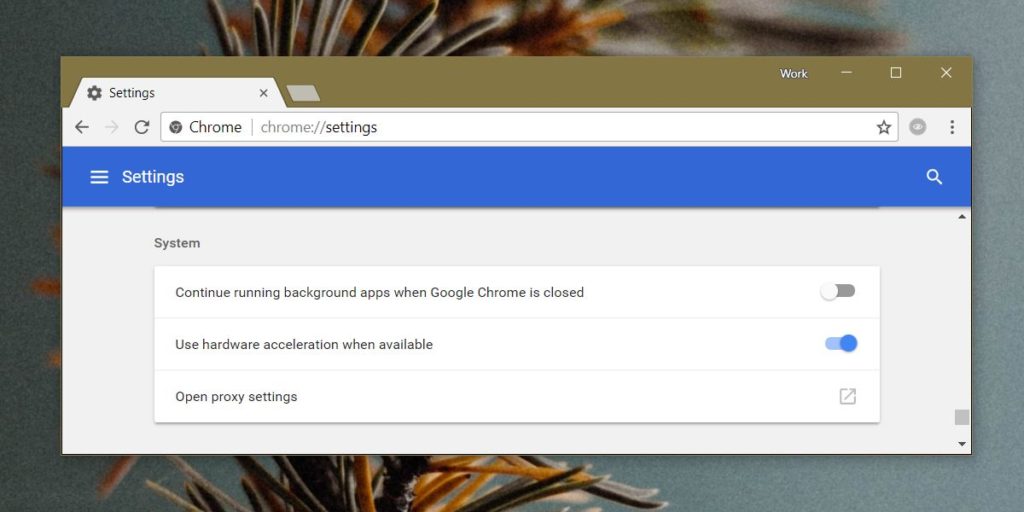

3. Evaluate Software Needs: Ensure that the software stack can leverage hardware acceleration. This may involve choosing compatible libraries, frameworks, or developing custom code.

4. Design System Architecture: Plan the architecture to integrate the hardware accelerator with existing systems. This includes determining data flow, interconnections, and interfaces.

5. Implementation: Install the hardware and develop or modify software to utilize the new capabilities. Test thoroughly to confirm that the system operates as intended.

6. Optimization: Fine-tune the system for performance. This may involve adjusting configurations, improving algorithms, or refining code to maximize efficiency.
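The first step, identifying which tasks would benefit from acceleration, usually starts with measurement. The sketch below times each stage of a workload so the slowest one can be considered for offloading. `time_stages` and the three-stage `PIPELINE` are hypothetical, and the stages are trivial stand-ins for real work.

```python
import time


def time_stages(stages, data):
    """Run (name, function) stages in order and record each stage's wall time."""
    timings = {}
    for name, fn in stages:
        start = time.perf_counter()
        data = fn(data)
        timings[name] = time.perf_counter() - start
    return data, timings


# Hypothetical three-stage workload used only to exercise the timer.
PIPELINE = [
    ("generate", lambda n: list(range(n))),
    ("square", lambda xs: [x * x for x in xs]),
    ("reduce", lambda xs: sum(xs)),
]

if __name__ == "__main__":
    result, timings = time_stages(PIPELINE, 200_000)
    slowest = max(timings, key=timings.get)
    print(f"result={result}, slowest stage: {slowest}")
```

A stage is a reasonable offload candidate only if it dominates the runtime and its work can be parallelized; a slow stage that is inherently sequential gains little from an accelerator.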

Compatibility Factors for Integration

When integrating hardware acceleration into existing systems, it’s crucial to consider compatibility to avoid potential issues that could hinder performance. Key compatibility factors include:

- Hardware Interface Compatibility: Ensure that the accelerator can physically connect to the existing system’s bus (e.g., PCIe for GPUs).

- Operating System Support: Verify that the operating system supports the hardware and drivers required for optimal function.

- Software Compatibility: Assess whether existing applications can utilize the hardware acceleration capabilities or if modifications are necessary.

- Scalability: Choose hardware that allows for future upgrades and expansion to accommodate potential increases in processing demand.
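Some of these checks can be scripted. The sketch below gathers a few basic facts about the host using only the Python standard library. `compatibility_report` is a hypothetical helper, and the presence of NVIDIA's `nvidia-smi` tool on the PATH is used purely as a heuristic that a driver is installed; it does not confirm that an application can actually use the device.

```python
import platform
import shutil


def compatibility_report() -> dict:
    """Collect a few facts relevant to accelerator support on this machine."""
    return {
        "os": platform.system(),             # e.g. "Linux", "Windows", "Darwin"
        "architecture": platform.machine(),  # e.g. "x86_64", "arm64"
        # True when the nvidia-smi tool is on PATH (a hint, not proof, of a driver).
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for key, value in compatibility_report().items():
        print(f"{key}: {value}")
```

Physical interface and application-level compatibility still need to be verified against the vendor's documentation.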

Optimizing Performance of Hardware-Accelerated Systems

Maximizing the performance of a hardware-accelerated system involves several strategies that can lead to significant improvements. Consider the following optimization tips:

- Parallel Processing: Design applications to take full advantage of parallel processing capabilities offered by hardware accelerators. This can significantly enhance throughput and performance.

- Memory Management: Optimize memory usage by minimizing data transfer between the CPU and the accelerator, ensuring that data is local to the processing unit as much as possible.

- Use Efficient Algorithms: Implement algorithms specifically designed for acceleration. Algorithms that leverage parallelism and reduce computational complexity can yield better results.

- Profiling and Benchmarking: Continuously monitor system performance through profiling tools and benchmarking to identify bottlenecks and areas for improvement.
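The first two tips can be put into numbers with a simple estimate. The sketch below applies Amdahl's law with an added term for CPU-to-accelerator transfer time. `estimated_speedup` is a hypothetical helper, and the model assumes the serial part, the accelerated part, and the transfers do not overlap, which real systems often improve on.

```python
def estimated_speedup(cpu_seconds: float, parallel_fraction: float,
                      kernel_speedup: float, transfer_seconds: float = 0.0) -> float:
    """Estimate end-to-end speedup from offloading part of a workload.

    cpu_seconds:       runtime of the whole task on the CPU alone
    parallel_fraction: share of that runtime that can be offloaded (0 to 1)
    kernel_speedup:    how much faster the accelerator runs the offloaded part
    transfer_seconds:  extra time spent moving data to and from the accelerator
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    serial = cpu_seconds * (1.0 - parallel_fraction)
    accelerated = cpu_seconds * parallel_fraction / kernel_speedup
    return cpu_seconds / (serial + accelerated + transfer_seconds)


if __name__ == "__main__":
    # Illustrative inputs only: 10 s task, 90% offloadable, 10x faster kernel.
    print(f"no transfer cost: {estimated_speedup(10.0, 0.9, 10.0):.2f}x")
    print(f"1 s of transfers: {estimated_speedup(10.0, 0.9, 10.0, 1.0):.2f}x")
```

The estimate shows why a fast kernel alone is not enough: the remaining serial work and data movement cap the overall gain, which is the motivation for keeping data resident on the accelerator.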

“Optimizing the architecture and algorithms is key to unlocking the true potential of hardware acceleration.”

Conclusion

In conclusion, hardware acceleration represents a pivotal advancement in computing technology, revolutionizing how industries operate and solve problems. As we look ahead, the continuous evolution of hardware and software will drive further enhancements in performance, enabling even more sophisticated and resource-intensive applications. Staying informed about these developments will be crucial for anyone looking to harness the power of hardware acceleration in their endeavors.

FAQ

What is the main advantage of hardware acceleration?

The main advantage is significantly enhanced performance, allowing for faster processing and improved efficiency in executing demanding tasks.

Can all applications benefit from hardware acceleration?

Not all applications can benefit; software must be specifically designed to leverage hardware acceleration for optimal results.

Is hardware acceleration cost-effective?

While initial setup costs can be high, the long-term performance benefits often outweigh the investment, especially for resource-intensive applications.

Are there any security risks associated with hardware acceleration?

Yes, hardware-accelerated systems can introduce unique vulnerabilities, requiring careful consideration and security measures during implementation.

How does hardware acceleration impact energy consumption?

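Often it reduces energy consumption: specialized hardware tends to finish a task faster and with less energy per operation than a general-purpose CPU. The total still depends on the specific hardware and workload, since high-performance accelerators can draw substantial power and need additional cooling.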