Neural networks have revolutionized the way we approach problem-solving in technology and science. Loosely inspired by the workings of the human brain, these computational models learn from data to tackle complex tasks efficiently. From image recognition to natural language processing, neural networks are at the forefront of artificial intelligence, reshaping various industries and enhancing our daily lives.

The journey into understanding neural networks begins with their biological inspiration, highlighting how artificial neurons operate similarly to their biological counterparts while also diverging in significant ways. This fascinating intersection of biology and technology sets the stage for exploring the architecture and mechanisms that empower neural networks, laying the groundwork for their vast array of applications.

Neural Networks and Their Biological Inspiration

The development of artificial neural networks (ANNs) has been significantly influenced by the structure and function of biological neural networks in the human brain and nervous system. Understanding this connection helps clarify how ANNs can perform tasks that resemble certain cognitive functions, which makes them powerful tools for a wide range of applications. By loosely mimicking how biological neurons communicate and process information, artificial networks can learn from data, adapt to new examples, and perform complex tasks without being explicitly programmed for each one.

Biological neurons are specialized cells that transmit information through electrical and chemical signals. Each neuron consists of a cell body (soma), dendrites that receive inputs, and an axon that sends outputs to other neurons. Neurons communicate through synapses, where neurotransmitters carry the signal from one cell to the next. Artificial neurons, by contrast, are simplified mathematical models: each one multiplies its inputs by weights, adds a bias, and passes the sum through an activation function to produce an output. Despite these differences, the two share a basic pattern of operation: both transform inputs into outputs, and both adjust the strength of their connections based on experience, which is how they learn.
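The artificial neuron just described fits in a few lines of Python. This is a minimal sketch assuming a sigmoid activation; the function name `neuron_output` and the example weights are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three inputs with illustrative (not learned) weights.
print(neuron_output([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], bias=0.0))
```

In this picture, learning means changing `weights` and `bias`; the structure of the neuron itself stays fixed.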

The tasks at which artificial neural networks excel often parallel biological processes. Image recognition, for instance, is a task where ANNs shine, much as the human brain effortlessly recognizes faces and objects. The convolutional neural network (CNN), a type of ANN, analyzes local pixel patterns in images, an approach loosely inspired by how the visual cortex processes visual stimuli. Another example is natural language processing (NLP), where ANNs can interpret and generate human language. Just as humans learn language through exposure and experience, these models learn statistical patterns from large amounts of text, which lets them capture aspects of context, tone, and meaning.

The ability of ANNs to perform tasks such as speech recognition, predictive text generation, and even playing complex games like chess or Go illustrates their effectiveness in imitating certain cognitive functions. These parallels between biological and artificial systems underline a fascinating intersection of neuroscience and computer science, paving the way for continued advancements in intelligent systems.

Similarities and Differences between Biological and Artificial Neurons

Comparing biological and artificial neurons is essential to understanding neural networks. Although both process information, their underlying mechanisms differ significantly. The key points are:

- Structure: Biological neurons have a complex structure with dendrites, axons, and synapses, while artificial neurons are simpler, often represented as nodes in a computational model.

- Signal Transmission: Biological neurons communicate via electrical impulses and chemical neurotransmitters, whereas artificial neurons use numerical data and mathematical functions to simulate processing.

- Learning Mechanism: Biological neurons adjust their synaptic strength based on experience (neuroplasticity), while artificial networks adjust their weights during training, typically using gradients computed by backpropagation.

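- Processing Speed: Individual biological neurons are slow compared with digital hardware, limited by signal conduction and chemical transmission at synapses, although the brain compensates with massive parallelism. Artificial neurons running on modern processors can compute at very high rates, enabling real-time applications.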

The study of these similarities and differences not only enhances our understanding of both systems but also fuels innovations in artificial intelligence, allowing researchers to develop more sophisticated and efficient neural networks.

The Architecture of Neural Networks

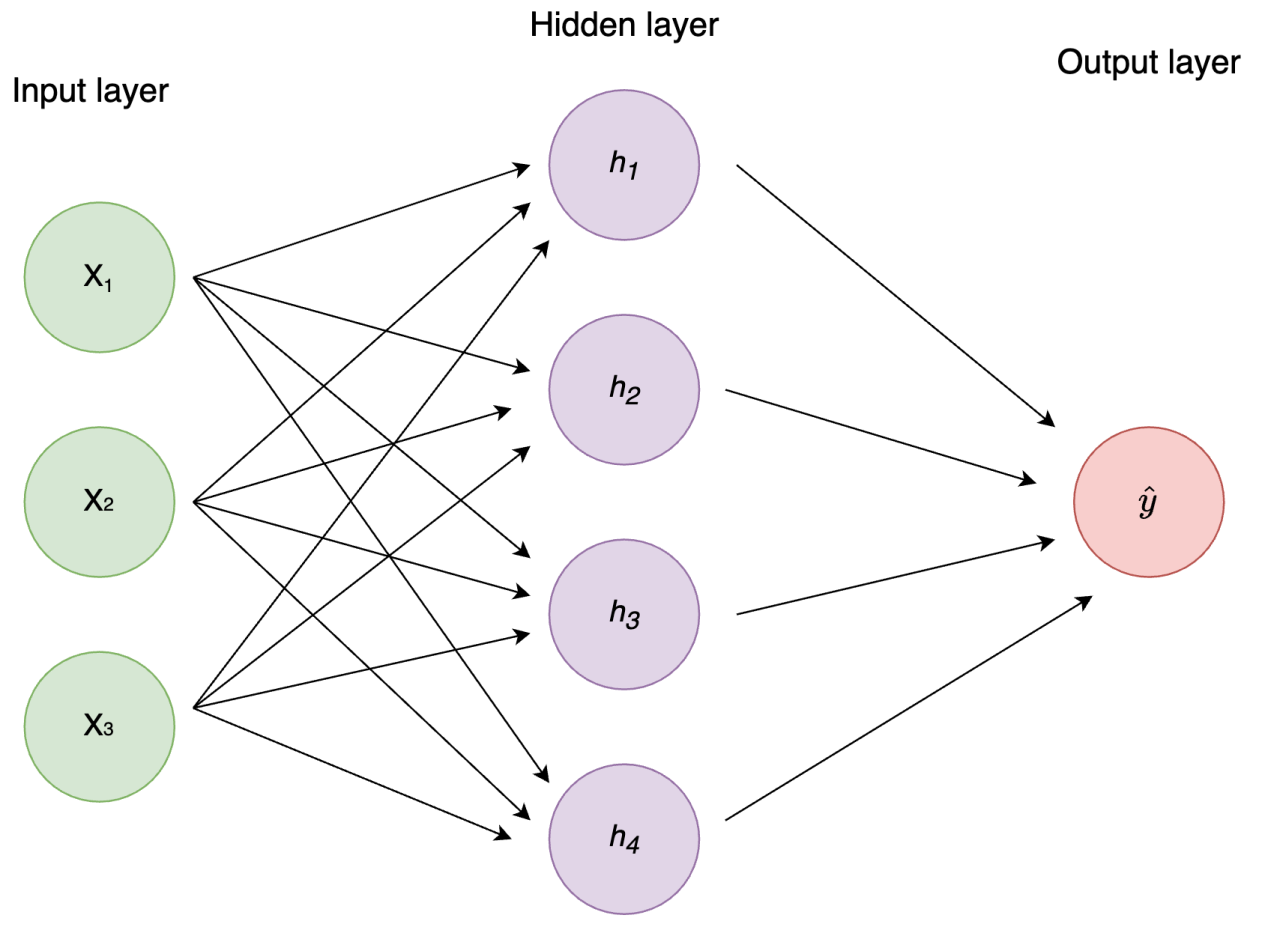

Neural networks are structured in a way loosely inspired by the human brain, and their architecture plays a crucial role in determining their capabilities and effectiveness. The architecture consists of layers of interconnected nodes, or neurons, through which information flows and is processed. Each layer serves a specific purpose, contributing to the network's overall ability to learn from input data and make predictions.

The architecture of a neural network typically includes three main types of layers: input layers, hidden layers, and output layers.

Layers in a Neural Network

The input layer is the first layer of the network and serves as the entry point for the data. Each neuron in this layer corresponds to a feature in the dataset. The hidden layers, which can consist of one or more layers, perform the bulk of the computation. They apply weights to the inputs, transforming them through neurons’ activation functions to extract complex patterns and relationships. The final output layer produces the result based on the information processed through the previous layers. Each neuron in this layer represents a potential output class or predicted variable.
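A forward pass through these three kinds of layers can be sketched in plain Python. This is an illustration under stated assumptions: the layer sizes and weights are hypothetical, the hidden layer uses ReLU, and the output layer applies softmax to turn scores into class probabilities, which is a common but not universal choice for classification.

```python
import math

def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn output-layer scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(features):
    # Hypothetical parameters: 3 input features, 4 hidden neurons, 2 output classes.
    hidden_w = [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.1, 0.1, 0.1]]
    hidden_b = [0.0, 0.1, -0.1, 0.0]
    out_w = [[0.3, -0.6, 0.9, 0.2], [-0.4, 0.7, -0.1, 0.5]]
    out_b = [0.0, 0.0]

    # The input layer does no computation: the features are passed in as-is.
    hidden = dense(features, hidden_w, hidden_b, relu)
    scores = dense(hidden, out_w, out_b, lambda z: z)  # linear output scores
    return softmax(scores)

print(forward([1.0, 2.0, 3.0]))  # one probability per output class
```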

Activation functions determine how the input signals are transformed at each neuron. They introduce non-linearity into the network; without them, any stack of layers would reduce to a single linear transformation, no matter how many layers it had. Common activation functions include the sigmoid function, which squashes its input into the range (0, 1); the hyperbolic tangent (tanh), which outputs values between -1 and 1; and the Rectified Linear Unit (ReLU), which outputs the input directly if it is positive and zero otherwise. Because these functions are differentiable (ReLU everywhere except at zero), gradients can flow through them during training, which is what allows the network to adjust its weights.
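The three activation functions named above can be written directly, together with a small check of why non-linearity matters: two linear layers with no activation between them reduce to one linear function. The parameter values in `two_linear_layers` are arbitrary, chosen only for illustration, and the sigmoid is written in a numerically stable form to avoid overflow.

```python
import math

def sigmoid(z):
    """Output in (0, 1); the branch avoids overflow in math.exp for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    return math.tanh(z)  # output in (-1, 1)

def relu(z):
    return z if z > 0 else 0.0  # the input if positive, otherwise zero

# Two stacked linear "layers" with no activation in between:
#   w2 * (w1 * x + b1) + b2 = (w2 * w1) * x + (w2 * b1 + b2)
def two_linear_layers(x, w1=2.0, b1=1.0, w2=-3.0, b2=0.5):
    return w2 * (w1 * x + b1) + b2

# ...which is exactly one linear layer with w = -6.0 and b = -2.5.
def one_linear_layer(x, w=-6.0, b=-2.5):
    return w * x + b

for x in (-2.0, -0.5, 0.0, 1.5, 3.0):
    print(x, two_linear_layers(x), one_linear_layer(x))
```

Inserting any of the non-linear functions between the two layers breaks this equivalence, which is what lets deeper networks represent more than straight-line relationships.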

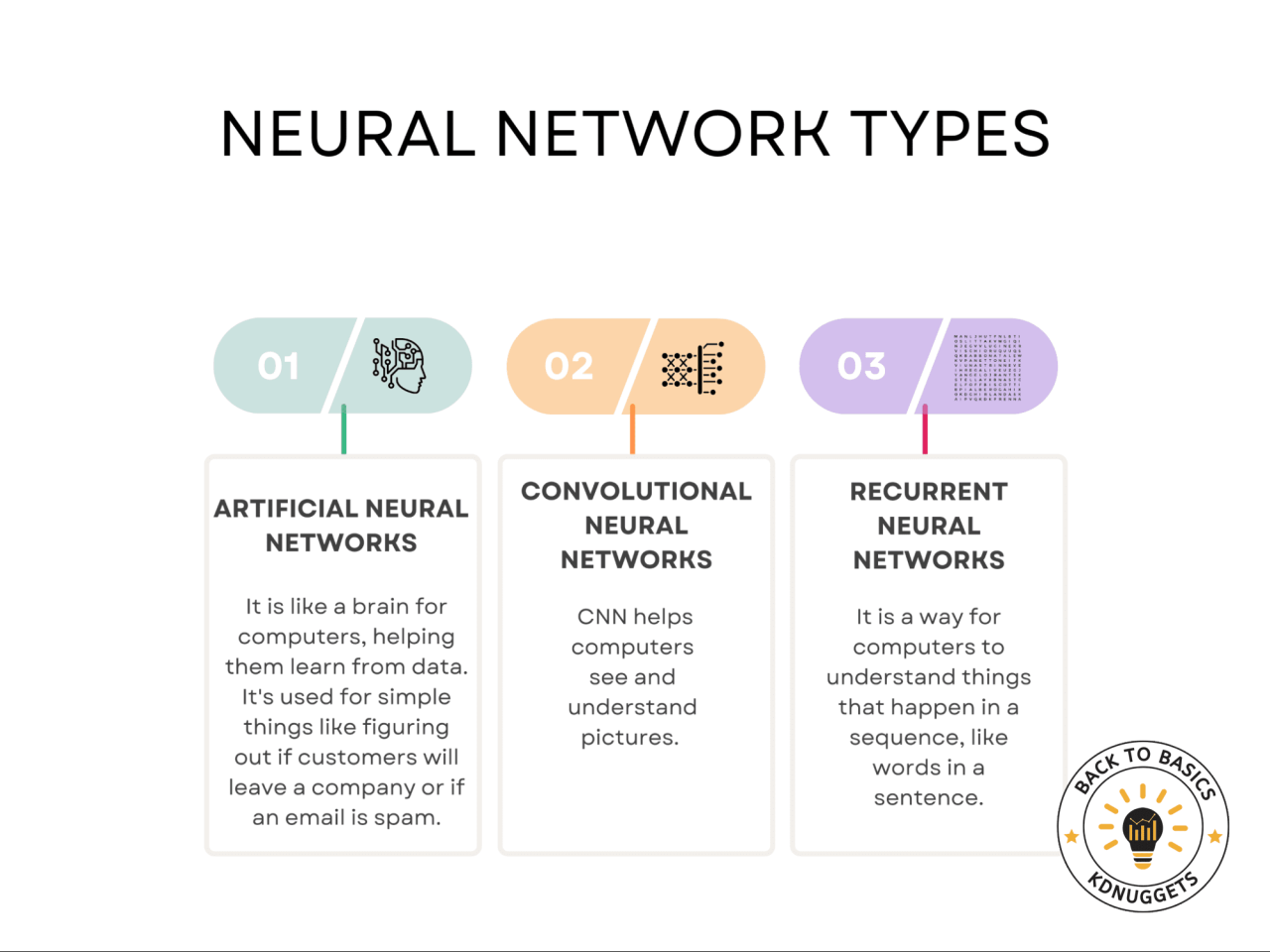

To illustrate the variety of neural network architectures in use, the following comparison highlights key characteristics of different types:

| Architecture Type | Description | Applications |
|---|---|---|
| Feedforward Neural Networks | Information moves in one direction, from input to output. | Classification problems like image and speech recognition. |
| Convolutional Neural Networks (CNNs) | Specialized for processing grid-like data, such as images. | Image classification, object detection, and other computer vision tasks. |
| Recurrent Neural Networks (RNNs) | Use loops (recurrent connections) so that information from previous steps influences current processing. | Sequential data analysis, such as time series forecasting and natural language processing. |
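The loop that distinguishes RNNs in the table can be illustrated with a single hidden unit. This is a minimal sketch assuming a simple (Elman-style) cell with a tanh activation; the weights `w_x`, `w_h`, and `b` are hypothetical values, and practical RNNs use vectors and weight matrices rather than scalars.

```python
import math

def rnn_hidden_states(sequence, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run a single-unit recurrent cell over a sequence.

    The same weights are reused at every time step, and the previous hidden
    state h feeds back into the next update: this feedback is the "loop".
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state depends on input AND old state
        states.append(h)
    return states

print(rnn_hidden_states([1.0, 0.0, 0.0]))
print(rnn_hidden_states([0.0, 0.0, 0.0]))
```

Because the hidden state is carried forward, the first input still influences the second and third states in the first sequence even though those inputs are zero.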

Training Neural Networks

The training of neural networks is a critical phase in developing effective machine learning models. It involves adjusting the network’s weights and biases to minimize a loss function, which measures the error in the network’s predictions. This process is highly iterative and relies on various optimization techniques to enhance performance. Understanding the training process, including methods like backpropagation and gradient descent, is essential for building robust neural networks.

Backpropagation is a key algorithm used in training neural networks. It efficiently computes the gradient of the loss function with respect to each weight by applying the chain rule, enabling the algorithm to propagate errors backward through the network. This process allows for the adjustment of weights to reduce prediction errors. Gradient descent is one of the most common optimization algorithms used in conjunction with backpropagation. It updates the weights by moving them in the opposite direction of the gradient of the loss function, thus minimizing the error iteratively.
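To make backpropagation and gradient descent concrete, here is a minimal sketch in plain Python for a hypothetical network with one tanh hidden unit and one linear output, trained with mean squared error. The backward pass applies the chain rule from the loss back to each parameter, and gradient descent then updates every parameter w as w ← w − η · ∂L/∂w, where η is the learning rate. The toy data, initial parameters, and learning rate are illustrative assumptions.

```python
import math

def forward(params, x):
    """Tiny network: one tanh hidden unit feeding one linear output unit."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2

def loss_and_grads(params, data):
    """Mean squared error and its gradient, computed by backpropagation."""
    w1, b1, w2, b2 = params
    n = len(data)
    loss = 0.0
    grads = [0.0, 0.0, 0.0, 0.0]  # dL/dw1, dL/db1, dL/dw2, dL/db2
    for x, y in data:
        h, y_hat = forward(params, x)
        err = y_hat - y
        loss += err * err / n
        # Backward pass: apply the chain rule from the loss toward the input.
        d_yhat = 2.0 * err / n     # dL/dy_hat
        grads[2] += d_yhat * h     # dL/dw2
        grads[3] += d_yhat         # dL/db2
        d_h = d_yhat * w2          # dL/dh: error sent back to the hidden unit
        d_z = d_h * (1.0 - h * h)  # tanh'(z) = 1 - tanh(z)^2
        grads[0] += d_z * x        # dL/dw1
        grads[1] += d_z            # dL/db1
    return loss, grads

def gradient_descent(params, data, learning_rate=0.1, steps=500):
    """Repeatedly move each parameter in the opposite direction of its gradient."""
    params = list(params)
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, data)
        history.append(loss)
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params, history

# Hypothetical toy task: learn y = 0.5 * x from a few points.
data = [(x, 0.5 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
params, history = gradient_descent([0.5, 0.0, 0.5, 0.0], data)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

A standard sanity check for hand-written backpropagation is to compare each analytic gradient with a finite-difference estimate, (L(w + ε) − L(w − ε)) / 2ε; the two should agree closely.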

Optimization Techniques and Challenges

Training neural networks is fraught with challenges that can impede convergence and the ability to generalize. Some common challenges include overfitting, vanishing gradients, and poor convergence. Techniques such as regularization, batch normalization, and adaptive learning rate methods can help mitigate these issues.

– Overfitting occurs when a model learns the training data too well, including noise and outliers, which negatively affects its performance on unseen data. Regularization techniques, such as L1 and L2 regularization, help to prevent overfitting by adding a penalty to the loss function for large weights.

– Vanishing gradients can occur in deep networks, where the gradients become too small for effective weight updates. Using ReLU (Rectified Linear Unit) activation functions and adding skip connections, as in architectures like ResNet, can alleviate this problem.

– Poor convergence can be addressed by selecting appropriate optimization algorithms. Adam and RMSprop are popular variants of gradient descent that adapt the learning rate for each weight, often leading to faster convergence.
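The regularization and optimizer ideas above can be combined in one small sketch: an L2 penalty added to a toy loss, minimized with the Adam update rule using the default hyperparameters from the original Adam paper (β1 = 0.9, β2 = 0.999, ε = 1e-8). The loss, the targets, and the penalty strength `LAM` are hypothetical, chosen so the answer can be checked by hand.

```python
import math

def adam_minimize(grad_fn, params, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=3000):
    """Adam: per-parameter step sizes from running estimates of gradient moments."""
    params = list(params)
    m = [0.0] * len(params)  # first moment: moving average of gradients
    v = [0.0] * len(params)  # second moment: moving average of squared gradients
    for t in range(1, steps + 1):
        grads = grad_fn(params)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction for the zero initialization
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params

# Hypothetical loss: sum_i (w_i - t_i)^2 + LAM * sum_i w_i^2  (the second term is the L2 penalty)
TARGETS = [3.0, -1.0]
LAM = 0.5

def l2_regularized_grad(params):
    # d/dw [(w - t)^2 + LAM * w^2] = 2 * (w - t) + 2 * LAM * w
    return [2 * (w - t) + 2 * LAM * w for w, t in zip(params, TARGETS)]

weights = adam_minimize(l2_regularized_grad, [0.0, 0.0])
print(weights)
```

Without the penalty, the minimum would sit at the targets (3.0 and -1.0); with λ = 0.5 it moves to t / (1 + λ), roughly 2.0 and -0.67, which is the pull toward smaller weights that L2 regularization provides. Each weight also gets its own effective step size, lr / (√v̂ + ε), which is what adapting the learning rate for each weight means in practice.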

Training a neural network efficiently depends on following established best practices. Key strategies include:

– Utilize a well-structured validation dataset to monitor performance and prevent overfitting.

– Implement cross-validation to ensure the model’s robustness across different subsets of data.

– Experiment with different neural network architectures and hyperparameters to discover the most effective configurations.

– Normalize or standardize input data to improve convergence speed and accuracy.

– Use dropout layers to enhance model generalization by preventing over-reliance on specific neurons during training.

– Consider leveraging transfer learning to utilize pre-trained models when applicable, saving both time and resources.

– Regularly visualize training metrics such as loss and accuracy to identify potential issues early in the training process.
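Two of the practices above, standardizing inputs and applying dropout, are small enough to sketch directly. This assumes the common "inverted dropout" formulation, in which surviving activations are rescaled during training so nothing changes at inference time; the function names and feature values are hypothetical.

```python
import random
import statistics

def fit_standardizer(train_values):
    """Mean and standard deviation computed from the training split only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values)
    return mean, (std if std > 0 else 1.0)  # guard against constant features

def standardize(values, mean, std):
    """Rescale values to zero mean and unit variance using training statistics."""
    return [(v - mean) / std for v in values]

def dropout(activations, p, training, rng):
    """Inverted dropout: during training, zero each activation with probability p
    and scale the survivors by 1 / (1 - p); at inference, pass values through."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# Hypothetical feature values, for illustration only.
train_feature = [12.0, 15.0, 9.0, 14.0]
mean, std = fit_standardizer(train_feature)
print(standardize(train_feature, mean, std))
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=True, rng=random.Random(0)))
```

Fitting the statistics on the training data only, and reusing them for validation and test data, keeps information from the held-out sets from leaking into training.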

By incorporating these optimization techniques and adhering to best practices, the training of neural networks can be made more efficient and effective, resulting in models that perform well on both training and unseen data.

Applications of Neural Networks in Various Industries

Neural networks have emerged as a revolutionary technology, enhancing processes and decision-making across multiple industries. Their ability to analyze vast amounts of data, recognize patterns, and deliver predictive insights transforms how businesses operate. This section explores the significant impact of neural networks in healthcare, finance, and autonomous vehicles, emphasizing their innovative applications.

Healthcare Applications

Neural networks are making significant inroads into the healthcare industry by improving diagnostics, personalizing treatment plans, and optimizing hospital operations.

– One notable application is in medical imaging, where convolutional neural networks (CNNs) are employed to detect anomalies in X-rays, MRIs, and CT scans. For instance, Google’s DeepMind has developed systems that can identify eye diseases from retinal scans with a high degree of accuracy.

– Additionally, neural networks analyze patient data to predict disease outbreaks, personalize medicine, and streamline administrative tasks, which can improve patient outcomes and reduce costs.

– In drug discovery, neural networks can help identify potential drug candidates by predicting molecular interactions, which may significantly shorten the time needed to bring new drugs to market.

Finance Applications

In the finance sector, neural networks are transforming how companies assess risk, detect fraud, and manage investments. Their capability to process and analyze large datasets enables financial institutions to make more informed decisions.

– For example, banks use neural networks for credit scoring, evaluating the creditworthiness of borrowers by analyzing various factors that traditional models may overlook. This can lead to more accurate lending decisions and lower default rates.

– Fraud detection systems leverage neural networks to monitor transactions in real-time, identifying unusual patterns that may indicate fraudulent behavior. Companies like PayPal utilize such systems to safeguard transactions and protect user accounts effectively.

– Moreover, algorithmic trading platforms employ neural networks to process market data and make trading decisions based on patterns and trends, with the aim of improving investment strategies.

Autonomous Vehicles Applications

The autonomous vehicle industry is heavily reliant on neural networks to enhance safety, navigation, and overall vehicle performance.

– Neural networks process data from various sensors, including cameras and LIDAR, to recognize objects on the road, enabling real-time responses to changing driving conditions. Tesla’s Autopilot is a prominent example: it relies primarily on camera input processed by neural networks to provide features such as lane-keeping and automated parking.

– Additionally, neural networks can support route planning by predicting traffic patterns and road conditions, helping vehicles choose more efficient paths. This can shorten travel times and reduce fuel consumption and emissions.

– Furthermore, neural networks contribute to enhancing user experience by enabling voice recognition systems within vehicles, allowing drivers to control navigation and entertainment systems hands-free.

Future Trends and Developments in Neural Networks

The rapid evolution of neural networks has set the stage for transformative advancements across various industries. As we look ahead, several key trends are emerging that signal the potential for profound impacts on society, ranging from healthcare to entertainment. These developments not only promise greater efficiency but also raise important ethical considerations that must be addressed.

Emerging Techniques in Neural Networks

Two particularly noteworthy techniques in neural networks are Generative Adversarial Networks (GANs) and transfer learning, each with distinct applications and implications for the future.

Generative Adversarial Networks (GANs) consist of two neural networks, the generator and the discriminator, which work against each other. The generator creates data that mimics real-world data, while the discriminator evaluates and distinguishes between real and generated data. This innovative approach is gaining traction in areas like image and video generation, art creation, and even drug discovery. The potential for GANs extends to creating highly realistic simulations, which could revolutionize industries such as gaming and virtual reality.
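The adversarial training described above is often expressed through two loss functions computed from the discriminator's outputs. The sketch below assumes the discriminator returns the probability that a sample is real; it uses the binary cross-entropy form of the original GAN objective for the discriminator and the widely used "non-saturating" loss for the generator. The function names are illustrative, and a real implementation would also compute gradients and update the two networks in alternation.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy with real samples labeled 1 and generated samples 0.

    d_real and d_fake hold the discriminator's probabilities that each sample
    is real; values must lie strictly between 0 and 1 (real code would clamp).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator rates fakes as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A confident, correct discriminator: its loss is small, the generator's is large.
print(discriminator_loss([0.99], [0.01]), generator_loss([0.01]))
# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
```

The two losses pull in opposite directions: lowering one generally raises the other, which is the competition between generator and discriminator in numeric form.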

Transfer learning, by contrast, reuses models pre-trained on one task to solve new problems with limited data. This approach significantly reduces the time and resources needed for training and is especially beneficial in fields where labeled data is scarce. For instance, a model trained on a large image-recognition dataset can be fine-tuned for medical imaging with substantially less data, enhancing diagnostic capabilities. This adaptability helps businesses develop AI-driven applications more quickly.
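The feature-extraction form of transfer learning can be sketched as follows: a pre-trained layer is kept frozen, and only a new output layer is trained on a small labeled dataset. Everything here is hypothetical: `PRETRAINED_W` stands in for weights that would come from a model trained on a large dataset, and the four samples are invented for illustration. Full fine-tuning would also update the pre-trained weights, usually with a small learning rate.

```python
import math
import random

# Hypothetical stand-in for pre-trained weights (3 features from 2 raw inputs).
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5], [-0.3, 0.8]]

def extract_features(x):
    """Frozen layer: these weights are never updated below."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(samples, labels, lr=0.5, epochs=200, seed=0):
    """Train only a new logistic-regression output layer on the frozen features."""
    feats = [extract_features(x) for x in samples]  # computed once: the extractor is frozen
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in PRETRAINED_W]
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            g = p - y  # gradient of binary cross-entropy w.r.t. the pre-activation
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict(w, b, x):
    f = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

samples = [[1.0, 0.0], [2.0, 0.5], [0.0, 1.0], [0.5, 2.0]]  # small, hypothetical labeled set
labels = [1, 1, 0, 0]
w, b = train_head(samples, labels)
print([round(predict(w, b, x), 2) for x in samples])
```

Only three weights and a bias are learned here, which is why this style of transfer learning can work with very little labeled data.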

Ethical Considerations in Neural Network Advancements

As neural networks continue to evolve, ethical considerations become paramount. The following points highlight significant ethical dilemmas that accompany the growth of this technology:

- Data Privacy and Security: The use of large datasets raises concerns about user privacy. Organizations must ensure compliance with data protection regulations, emphasizing transparency in data usage.

- Bias and Fairness: Neural networks can inadvertently perpetuate biases present in training data, leading to unfair outcomes in applications such as hiring or lending. Addressing these biases is critical to fostering equitable AI systems.

- Accountability: Because neural network decisions are difficult to interpret, assigning accountability for them is challenging. Determining responsibility in cases of failure or harm remains an open debate in AI ethics.

- Job Displacement: Automation driven by neural networks may lead to job displacement in certain sectors, necessitating discussions on workforce retraining and support for affected individuals.

By engaging with these ethical considerations, industry leaders and policymakers can help guide the responsible development of neural networks, balancing innovation with societal welfare.

Ending Remarks

As we conclude our exploration of neural networks, it becomes clear that their impact is both profound and far-reaching. From improving decision-making processes in healthcare and finance to driving innovation in autonomous vehicles, the potential of neural networks is just beginning to unfold. With ongoing advancements and ethical considerations in mind, the future promises exciting developments that could redefine our interaction with technology and its role in society.

FAQs

What are neural networks used for?

Neural networks are used for tasks such as image and speech recognition, natural language processing, and predictive analytics across various industries.

How do neural networks learn?

Neural networks learn through a process called training, in which they adjust their weights to reduce the difference between their predictions and the desired outputs, typically using gradients computed by backpropagation.

What is overfitting in neural networks?

Overfitting occurs when a neural network learns the training data too well, capturing noise rather than the underlying patterns, leading to poor performance on new data.

Can neural networks be used for real-time applications?

Yes, neural networks can be optimized for real-time applications like autonomous driving and live video processing, but require careful design and training.

What are the ethical concerns regarding neural networks?

Ethical concerns include issues of bias in training data, privacy violations, and the potential for job displacement due to automation.